・Product name: Multi Drive Kids Computer Pico (マルチドライブ キッズコンピュータ ピコ)

・Manufacturer: SEGA Yonezawa

・Size (approx.): depth 21 cm, width 36 cm, height 13 cm

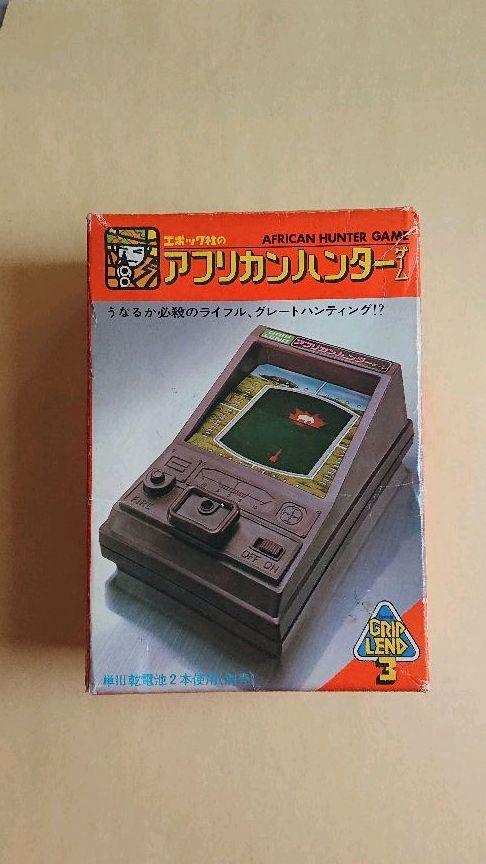

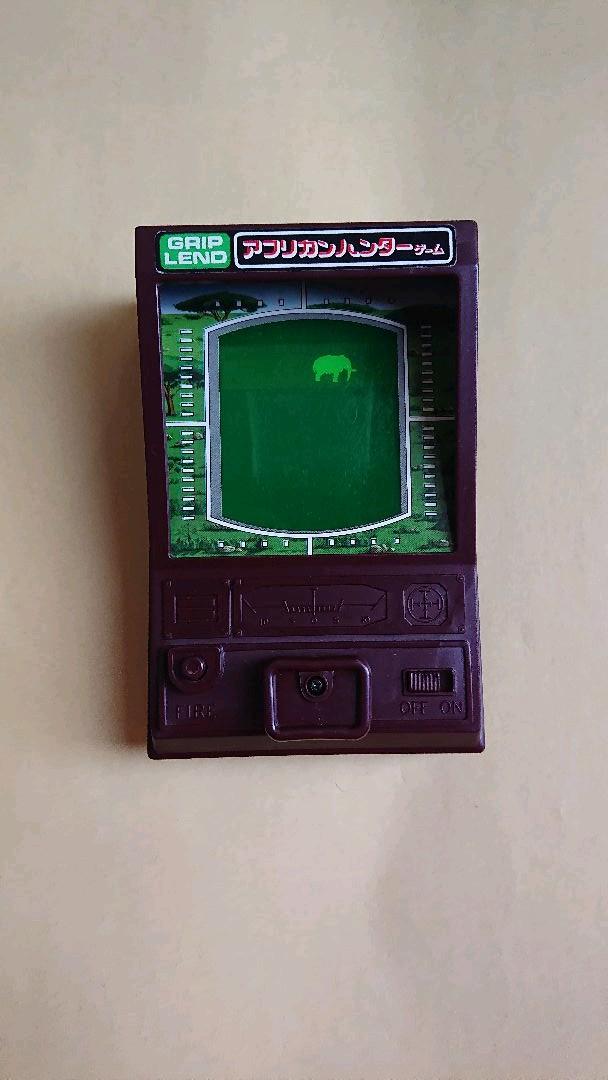

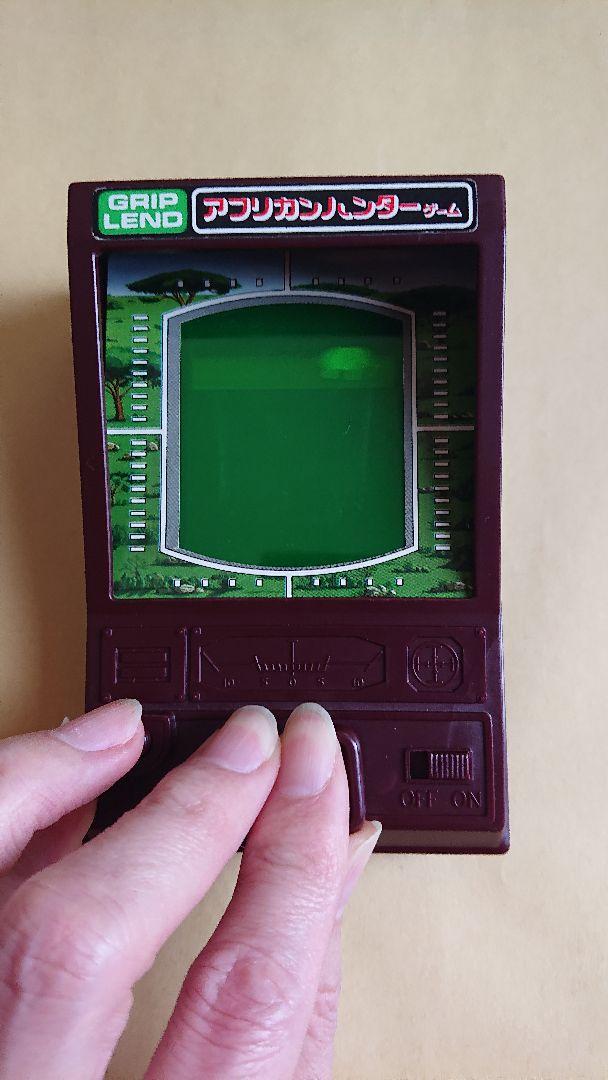

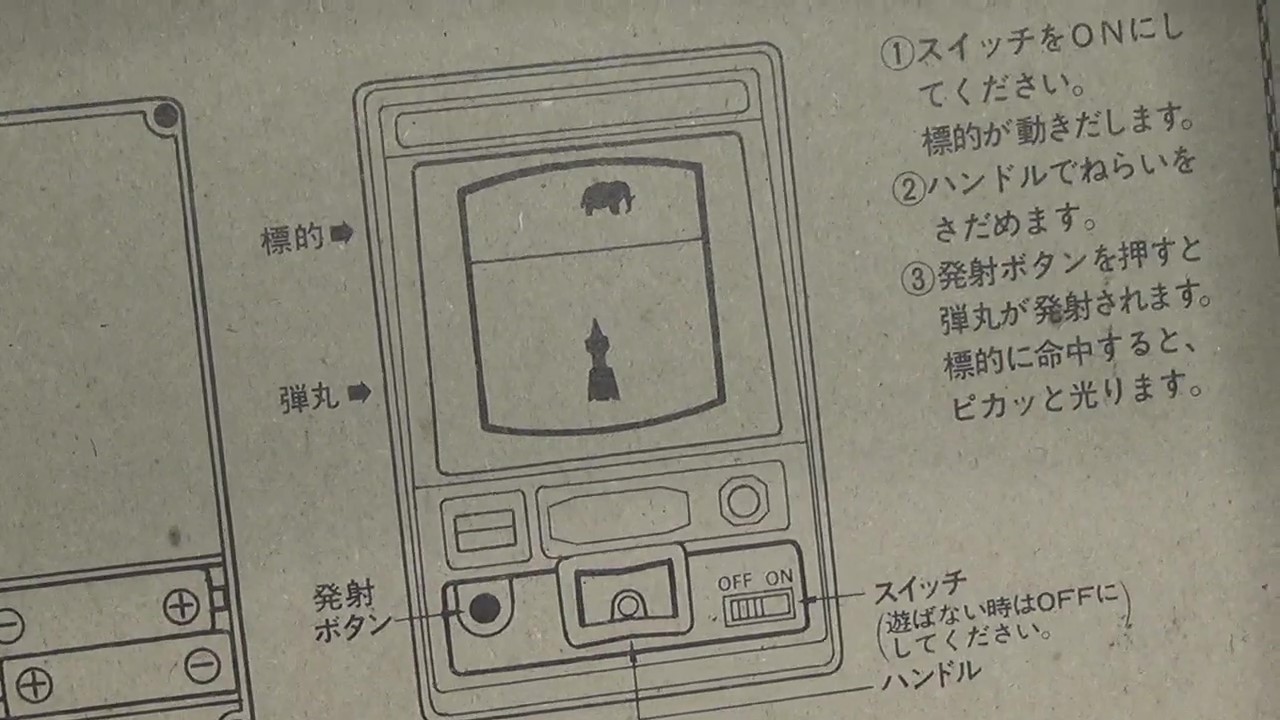

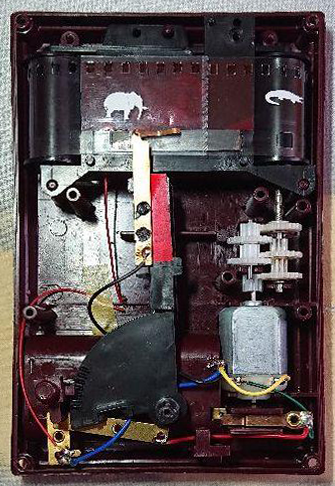

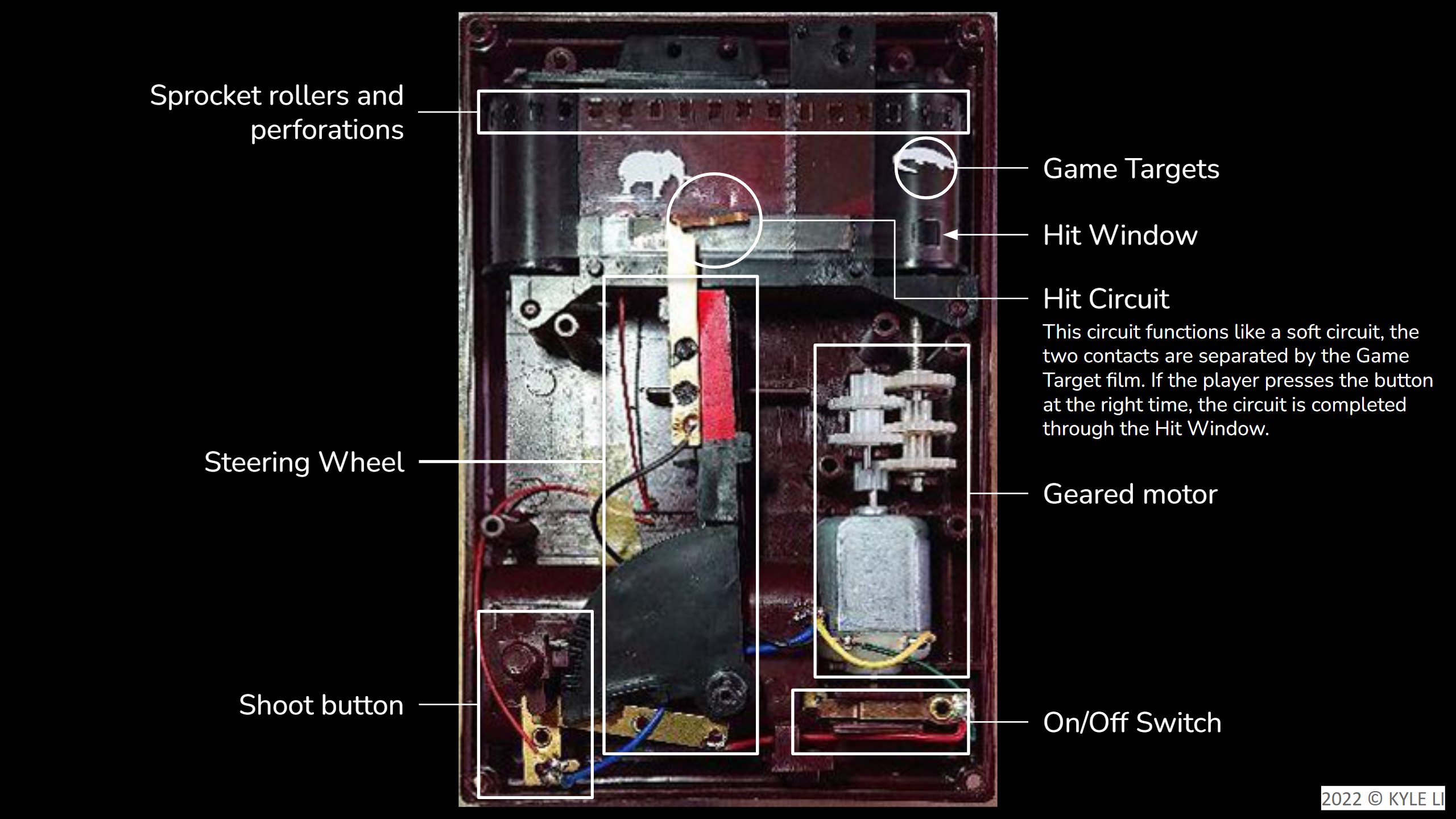

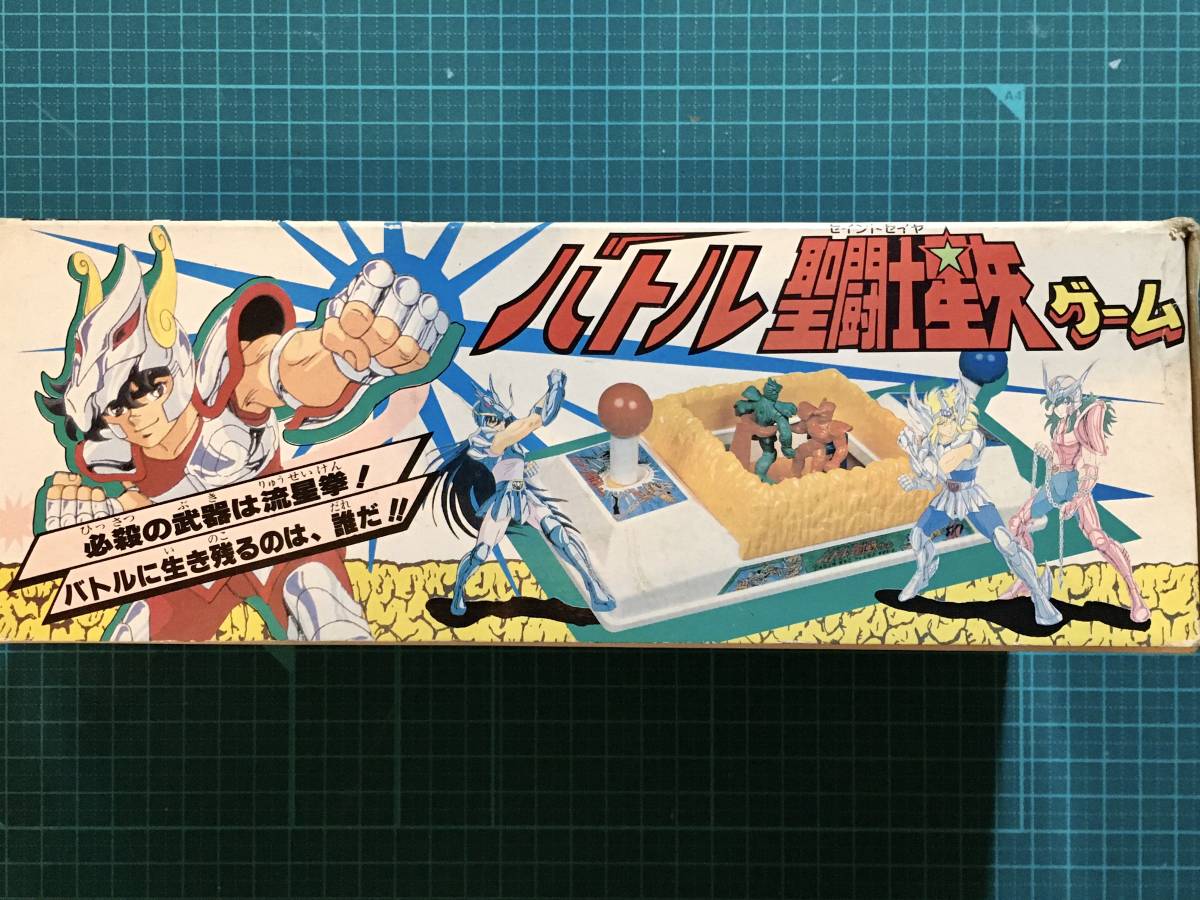

African Hunter Game by EPOCH (エポック) 1980

https://jp.mercari.com/item/m47878031739

What this animated GIF didn’t capture was the loud geared motor sound.

【[For course teaching use: TNA] African Hunter Game】 https://youtu.be/6oeCnEdjEBc

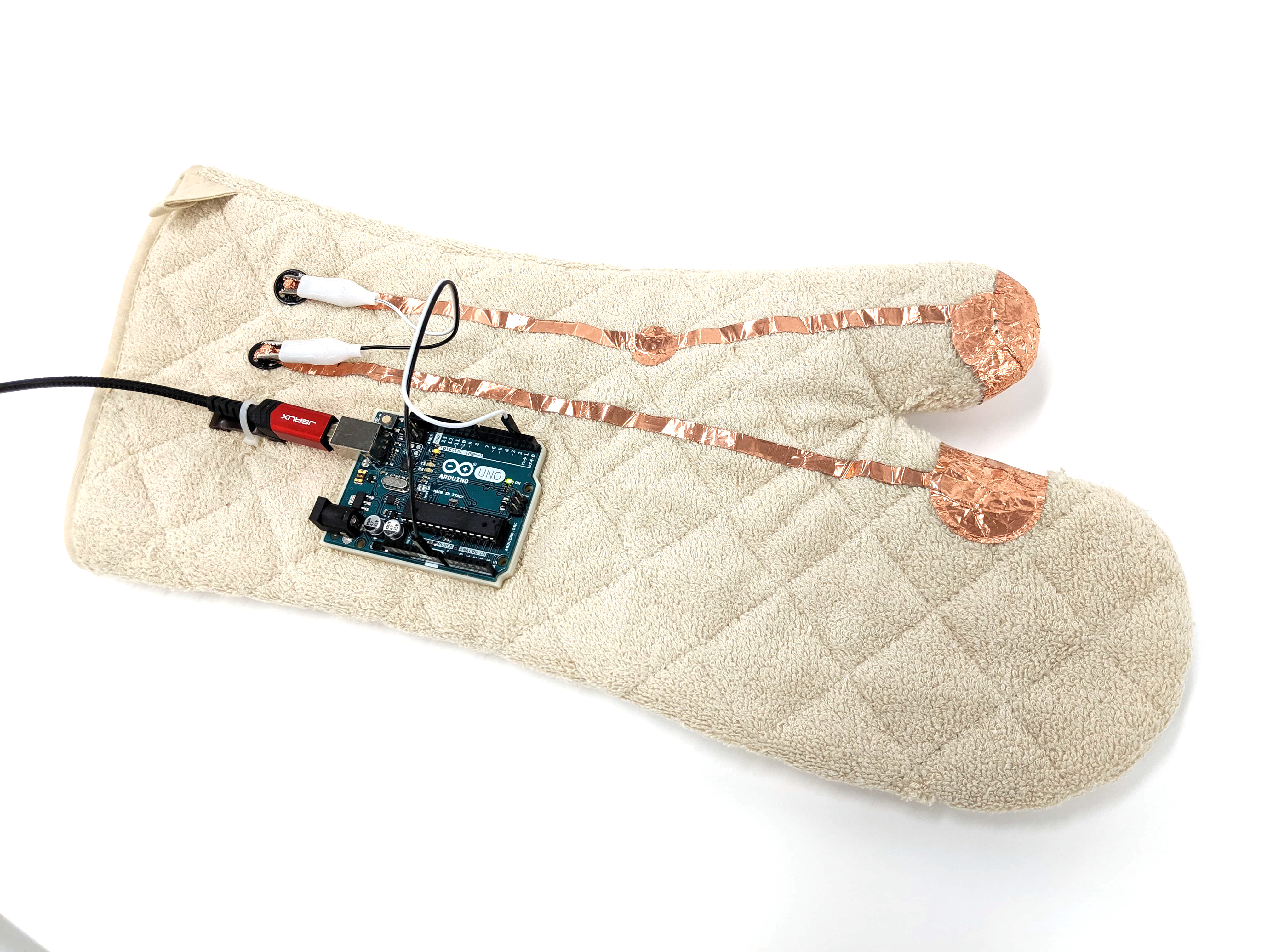

After 11 years as an in-person, classroom-bound, hands-on, expert-at-improvising type of teacher, I was forced to adapt to online teaching because of COVID. In the beginning, I was very frustrated that I was not able to create the same kind of learning engagement online as offline. I soon realized that I shouldn’t compare them at all, because they are two very different learning spaces. Instead of taking it for granted, I started to look at online teaching as an out-of-comfort-zone learning opportunity for myself.

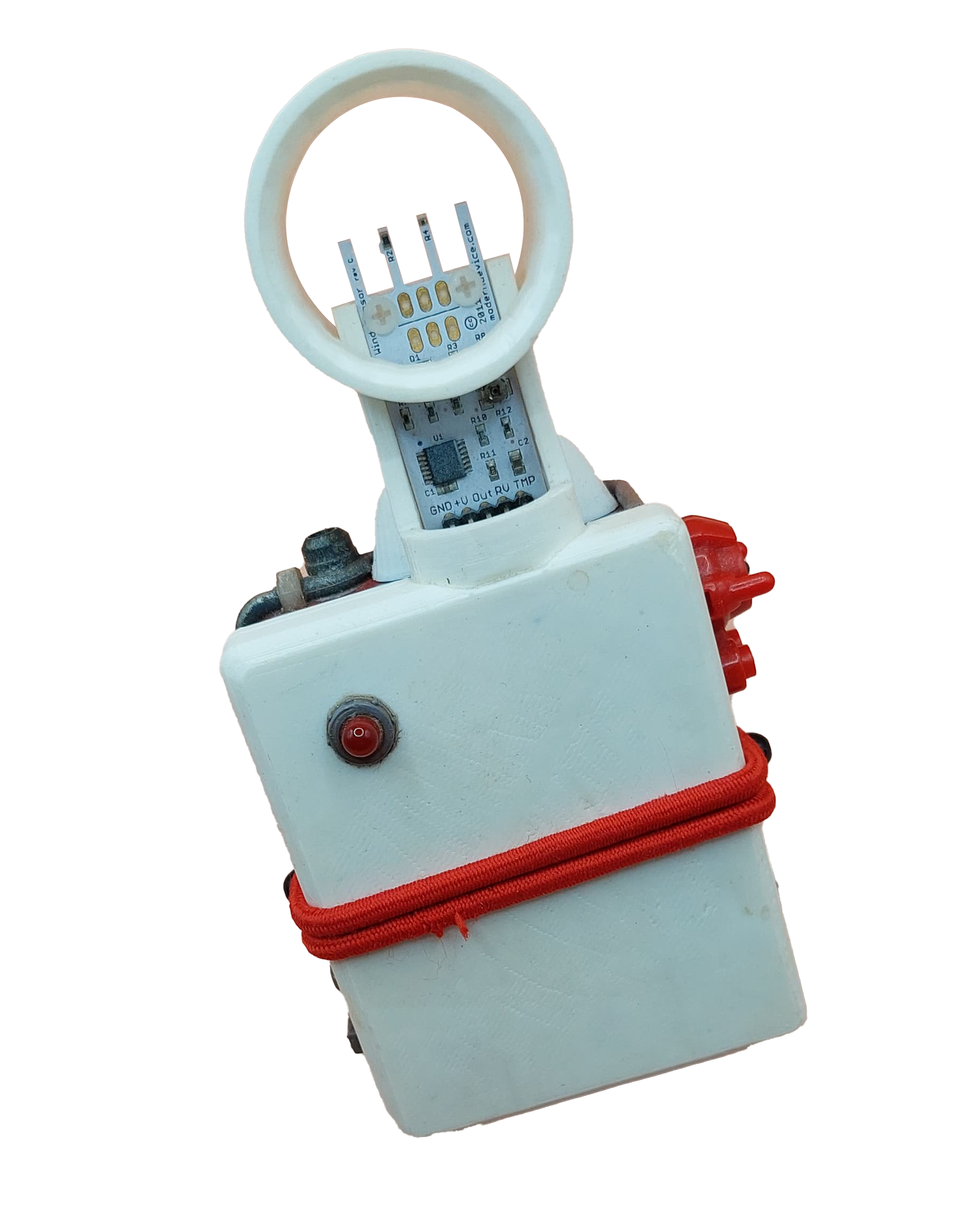

A Digital Native’s Playset is a collection of Arduino- and IoT-enabled physical gadgets that let users enrich their virtual interactions with others. Kyle Li has been engaged with online learning as a teacher for the past three years. While it was an unexpected change in Kyle’s academic career, it introduced him to a new array of design opportunities in online and virtual communication. A Digital Native’s Playset is the result of Kyle’s attempt to push virtual interaction beyond keyboard and mouse.

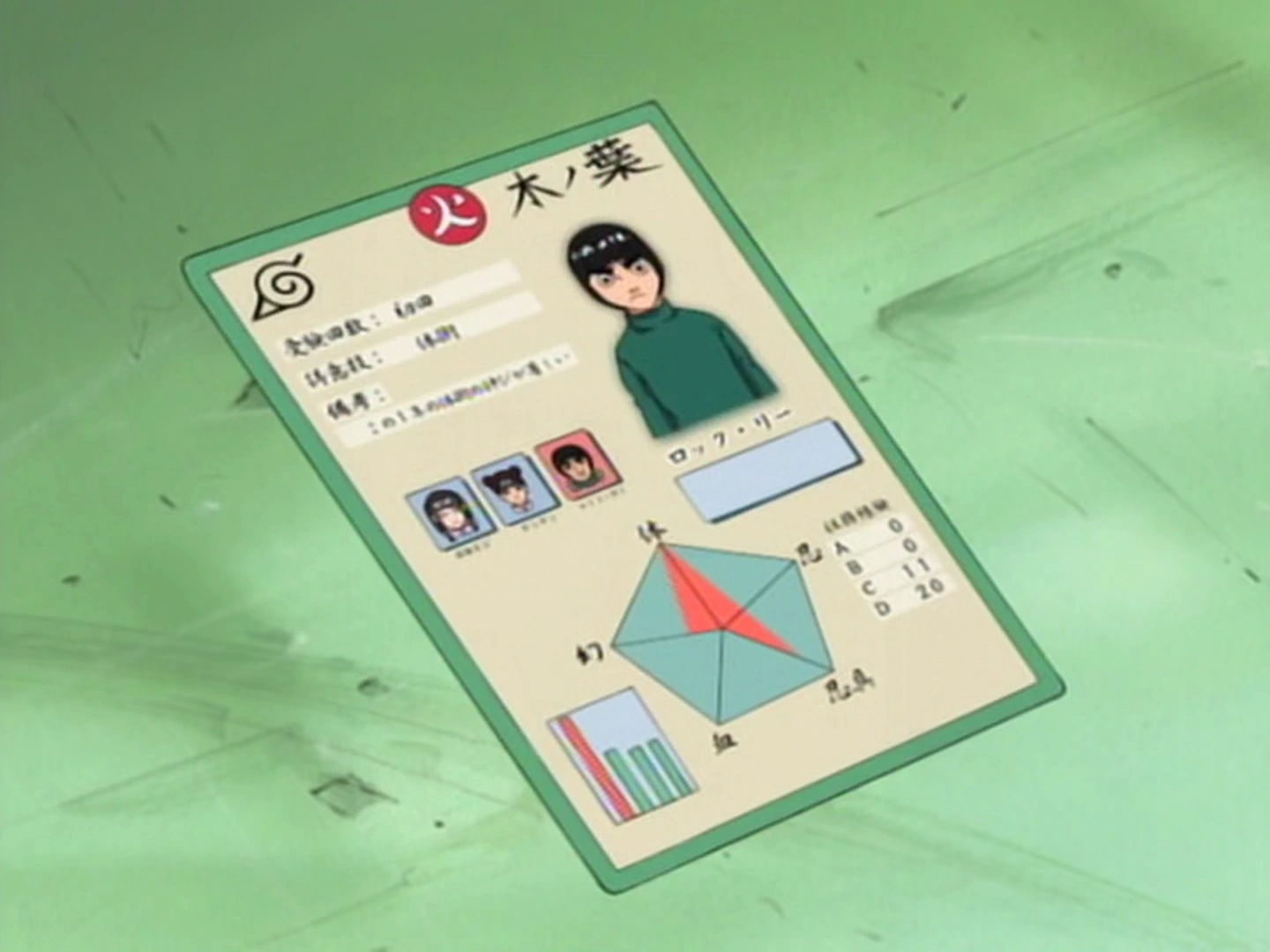

(Re)watched episode 23 today. I wonder why this didn’t go viral as a form of collectible cards for Naruto characters. There are various adaptations of the “忍識札” or “Ninja Info Card” in Naruto games, but the designs were completely different. The original design was the best because, in my opinion, it was the most informative.

https://naruto.fandom.com/wiki/Ninja_Info_Cards

https://www.deviantart.com/shadow-of-otogakure/art/Ninja-Info-Card-Template-Leaf-Village-898316346

Since August 2022, Ready Player Me no longer supports exporting avatars as FBX files. It’s still possible to convert a GLB avatar file to FBX manually using Blender.

https://docs.readyplayer.me/ready-player-me/integration-guides/unreal-sdk/blender-to-unreal-export

This is super exciting!!

GoDice JavaScript API: https://github.com/ParticulaCode/GoDiceJavaScriptAPI

GoDice Unity Demo: https://github.com/ParticulaCode/GoDiceUnityDemo

GoDice Python API: https://github.com/ParticulaCode/GoDicePythonAPI

https://styly.cc/ja/private/how-to-create-a-location-marker/

https://styly.cc/ja/mobile/?location={location ID}

https://styly.cc/ja/mobile/?location=d83f83ae-af5a-4480-bd3a-9fa6e40cf5fe

https://styly.cc/ja/mobile/?location={location GUID}&size={printed size}

https://styly.cc/ja/mobile/?location=d83f83ae-af5a-4480-bd3a-9fa6e40cf5fe&size=0.05

(5cm x 5cm)

https://www.tagindex.com/tool/url.html

After encoding

https%3A%2F%2Fstyly.cc%2Fja%2Fmobile%2F%3Flocation%3Dd83f83ae-af5a-4480-bd3a-9fa6e40cf5fe%26size%3D0.05

https://stylymr.page.link/?link=https://STYLY_REPLACE&apn=com.psychicvrlab.stylymr&isi=1477168256&ibi=com.psychicvrlab.stylymr

https://stylymr.page.link/?link=https%3A%2F%2Fstyly.cc%2Fja%2Fmobile%2F%3Flocation%3Dd83f83ae-af5a-4480-bd3a-9fa6e40cf5fe%26size%3D0.05&apn=com.psychicvrlab.stylymr&isi=1477168256&ibi=com.psychicvrlab.stylymr
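The manual steps above (fill in the location GUID and size, percent-encode the URL, then paste it into the `link=` parameter) could also be scripted. This is only a rough sketch using Python's standard library: the function names `marker_url` and `dynamic_link` are my own, and treating `size` as meters is an assumption based on 0.05 matching the 5cm x 5cm note.

```python
from urllib.parse import quote

# Values copied from the URLs in these notes.
MOBILE_BASE = "https://styly.cc/ja/mobile/"
DYNAMIC_LINK_BASE = "https://stylymr.page.link/"
APP_ID = "com.psychicvrlab.stylymr"   # used for both apn and ibi
APP_STORE_ID = "1477168256"           # used for isi


def marker_url(location_guid: str, size: float) -> str:
    """Build the plain location-marker URL (size assumed to be in meters)."""
    return f"{MOBILE_BASE}?location={location_guid}&size={size}"


def dynamic_link(location_guid: str, size: float) -> str:
    """Percent-encode the marker URL and wrap it in the page.link URL."""
    # safe="" makes quote() also encode ":" "/" "?" "=" and "&",
    # which is what the online encoder step does.
    encoded = quote(marker_url(location_guid, size), safe="")
    return (
        f"{DYNAMIC_LINK_BASE}?link={encoded}"
        f"&apn={APP_ID}&isi={APP_STORE_ID}&ibi={APP_ID}"
    )


if __name__ == "__main__":
    print(dynamic_link("d83f83ae-af5a-4480-bd3a-9fa6e40cf5fe", 0.05))
```

The printed URL is what would then go into the QR code generator.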

https://qr.quel.jp/form_bsc_msg.php

https://drive.google.com/file/d/1hGpowVQjRLTKsoMqtMt6YpAaPvZFj7RT/view?usp=sharing