I recently participated in the Smart Craft Studio camp and spent 3 weeks in Hida, Japan for a series of intensive wood joinery and Internet of Things (IoT) workshops. During one of the IoT workshops, Shigeru Kobayashi sensei, the creator of Arduino Fio, walked us through how to set up our Raspberry Pi with Amazon Alexa. It was really exciting when all of us heard Alexa’s greeting for the first time.

“I am interested in collaborating with Alexa on something,” I said to myself. The first thing that came to mind was King Curtis’s Memphis Soul Stew. I wondered if we (Alexa and I) could build something up together visually, the way King Curtis builds up the intro in Memphis Soul Stew.

Alexa is on IFTTT. The first pipeline I thought of was Alexa to Google Drive to Unity.

It works, but it is not ideal, and here is why. I quickly loaded a demo scene and hid all the GameObjects by disabling their Mesh Renderers. When I say a specific sentence to Alexa, a new row is added to a spreadsheet on Google Drive. Unity then shows GameObjects based on the data in the CSV file. The problem is that the CSV file doesn’t get updated quickly enough. With the current setup, going from the verbal command to IFTTT to Google Drive to Unity takes about 30 seconds. That wait is too long.

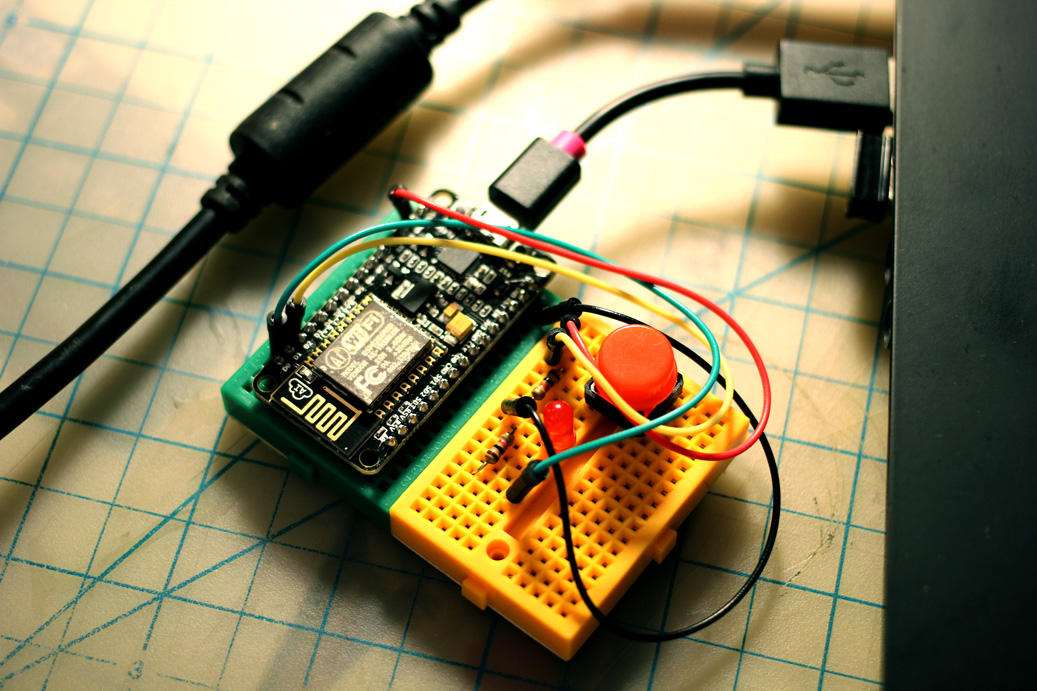
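
The "rows in a spreadsheet decide what is visible" step can be sketched roughly as follows. This is only an illustration written in Python to keep it short, not the Unity script I used. The column name `object`, the function name `visible_objects`, and the sample rows are all made up for the example, and it assumes the spreadsheet has already been downloaded as CSV text.

```python
import csv
import io


def visible_objects(csv_text, name_column="object"):
    """Return the object names that should be shown, in the order
    their rows first appear in the spreadsheet.

    Assumes (hypothetically) that each row IFTTT appends has a column
    called `object` holding the name of one scene object.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    names = []
    for row in reader:
        # Missing or empty cells are skipped rather than treated as names.
        name = (row.get(name_column) or "").strip()
        if name and name not in names:
            names.append(name)
    return names


# Hypothetical spreadsheet contents: one row per spoken command.
sample = "time,object\nt1,Bass\nt2,Drums\nt3,Bass\n"
print(visible_objects(sample))
```

On the Unity side, each returned name would be matched to a GameObject whose renderer gets switched back on. Note that nothing in this sketch addresses the delay: however the rows are read, the scene can only change after the new row has reached the exported file.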

The next pipeline I am going to try is an ESP8266 with IFTTT’s Maker Channel + Beebotte. It will be faster, theoretically speaking... And it was, after testing. However, it doesn’t feel like we are collaborating. We are more like Tony Stark and Jarvis, where I give some kind of formatted verbal instructions and Jarvis executes them...