This is super exciting!!

GoDice JavaScript API: https://github.com/ParticulaCode/GoDiceJavaScriptAPI

GoDice Unity Demo: https://github.com/ParticulaCode/GoDiceUnityDemo

Python API: https://github.com/ParticulaCode/GoDicePythonAPI

Author: admin

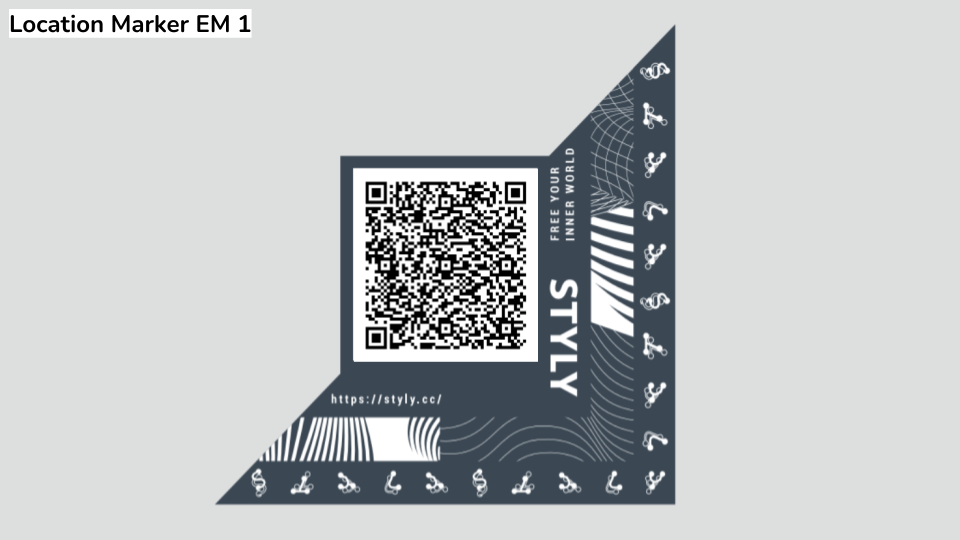

STYLY LOCATION MARKER

Location AR Marker:

https://styly.cc/ja/private/how-to-create-a-location-marker/

STEP 1:

Build the mobile location URL from the location ID:

https://styly.cc/ja/mobile/?location={location ID}

https://styly.cc/ja/mobile/?location=d83f83ae-af5a-4480-bd3a-9fa6e40cf5fe

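STEP 2:

Append the printed size of the marker:

https://styly.cc/ja/mobile/?location={location GUID}&size={printed size}

https://styly.cc/ja/mobile/?location=d83f83ae-af5a-4480-bd3a-9fa6e40cf5fe&size=0.05

(The size is in meters, so 0.05 means a 5cm x 5cm printed marker.)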

STEP 3:

URL-encode the full URL from STEP 2, for example with this online tool:

https://www.tagindex.com/tool/url.html

After encoding:

https%3A%2F%2Fstyly.cc%2Fja%2Fmobile%2F%3Flocation%3Dd83f83ae-af5a-4480-bd3a-9fa6e40cf5fe%26size%3D0.05

STEP 4:

In this dynamic link template, replace https://STYLY_REPLACE with the encoded URL:

https://stylymr.page.link/?link=https://STYLY_REPLACE&apn=com.psychicvrlab.stylymr&isi=1477168256&ibi=com.psychicvrlab.stylymr

https://stylymr.page.link/?link=https%3A%2F%2Fstyly.cc%2Fja%2Fmobile%2F%3Flocation%3Dd83f83ae-af5a-4480-bd3a-9fa6e40cf5fe%26size%3D0.05&apn=com.psychicvrlab.stylymr&isi=1477168256&ibi=com.psychicvrlab.stylymr

Generate a QR code from the resulting link:

https://qr.quel.jp/form_bsc_msg.php
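Steps 1 to 4 are plain string building, so they can also be scripted instead of going through the online encoder. This is a minimal sketch that assumes only the URL formats shown above; `location_url` and `marker_link` are hypothetical helper names, and the output still has to be pasted into a QR code generator.

```python
from urllib.parse import quote

# Dynamic link template from STEP 4; "https://STYLY_REPLACE" marks where the encoded URL goes.
TEMPLATE = ("https://stylymr.page.link/?link=https://STYLY_REPLACE"
            "&apn=com.psychicvrlab.stylymr&isi=1477168256&ibi=com.psychicvrlab.stylymr")


def location_url(location_id, size_m):
    """STEP 1 + 2: mobile location URL plus the printed marker size in meters."""
    return f"https://styly.cc/ja/mobile/?location={location_id}&size={size_m}"


def marker_link(location_id, size_m):
    """STEP 3 + 4: percent-encode every reserved character and fill in the template."""
    encoded = quote(location_url(location_id, size_m), safe="")  # safe="" also encodes "/"
    return TEMPLATE.replace("https://STYLY_REPLACE", encoded)


if __name__ == "__main__":
    print(marker_link("d83f83ae-af5a-4480-bd3a-9fa6e40cf5fe", 0.05))
```

Passing `safe=""` matters: by default `quote` leaves `/` unencoded, which would not match the STEP 3 output.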

STEP 5:

https://drive.google.com/file/d/1hGpowVQjRLTKsoMqtMt6YpAaPvZFj7RT/view?usp=sharing

Midjourney

cyber punk hologram futuristic city in anime style

JoJo’s Bizarre Adventure meets hello kitty in gotham city

keith haring cyber punk

AI Robot Characters

utopian place where carebears and pokemons enjoying feasts, light shines from top

cheese pizza with cheesy crust

ai robot anime character

kamen rider, ironman, captain america

rusty cyberpunk implants in body and brain in industrial genre

dark forest creatures

And I saw a beast rise out of the sea, hauing seuen heads, and ten hornes, and vpon his hornes were ten crownes, and vpon his heads the name of blasphemie.

YAMI HUNTER AR

Traveling between parallel universes has become more frequent in recent years. Based on our research, the excessive and abnormal energy left behind by a jump between two universes attracts an exterritorial creature called YAMI, which is usually found in the void between dimensions. It was first discovered by our agent in Japan, hence the name. YAMI are generally not harmful to humans. However, the various energies they have digested, including energy from other universes, might cause a temporary imbalance that could lead to disasters. Your mission is to survey the area for YAMI and send them back to the void with our handheld device.

The alternative controller:

This is inspired by one of my favorite handheld electronic games, Treasure Gaust (トレジャーガウスト), and I thought it would work nicely as an AR experience on smartphones. I built a quick demo that lets the player follow and capture a YAMI.

Demo Video: https://www.instagram.com/p/CgIHxNNAjcM/

STYLY demo: https://gallery.styly.cc/scene/0835a582-33fe-4413-bcd2-53e33a2f13c7

Now I want more game mechanics than just tapping on the phone screen.

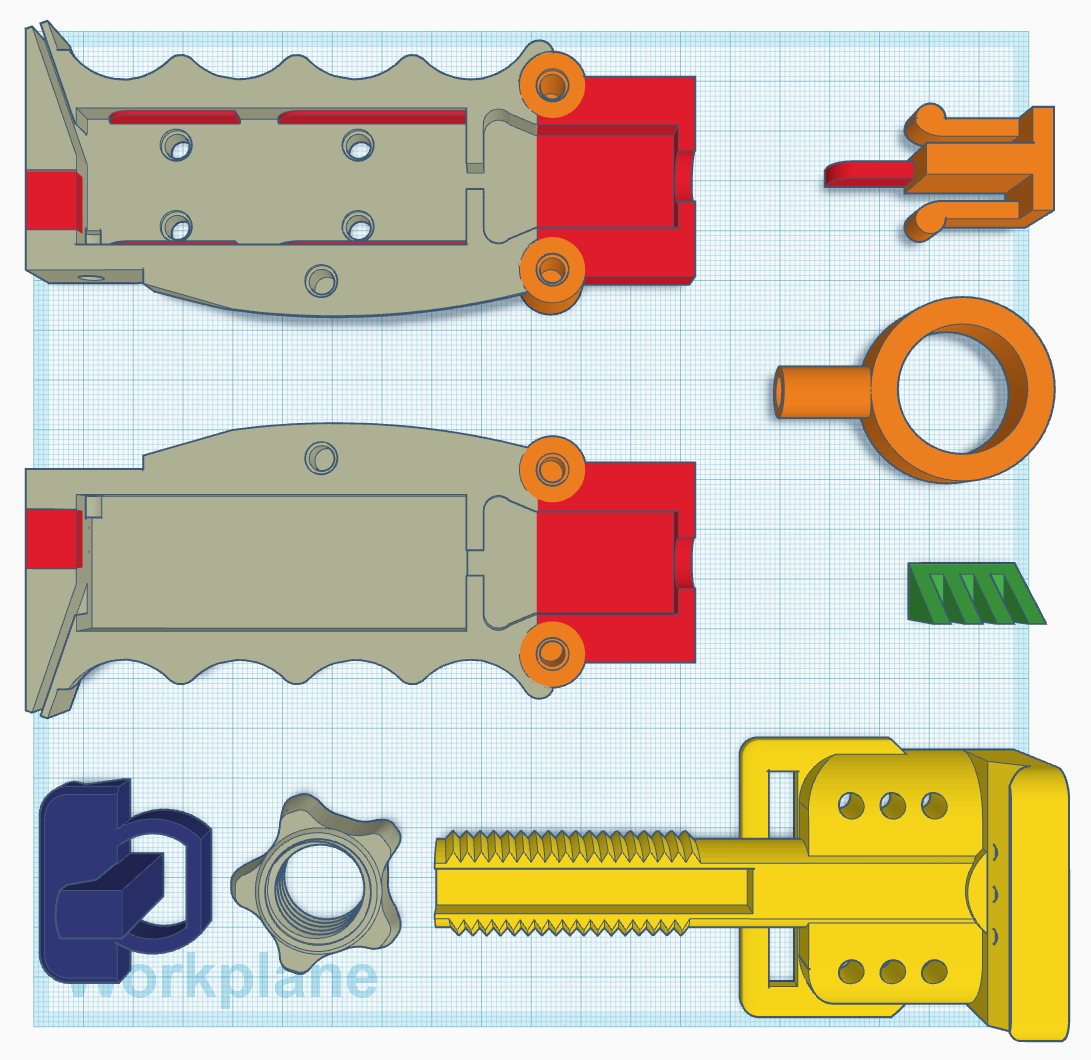

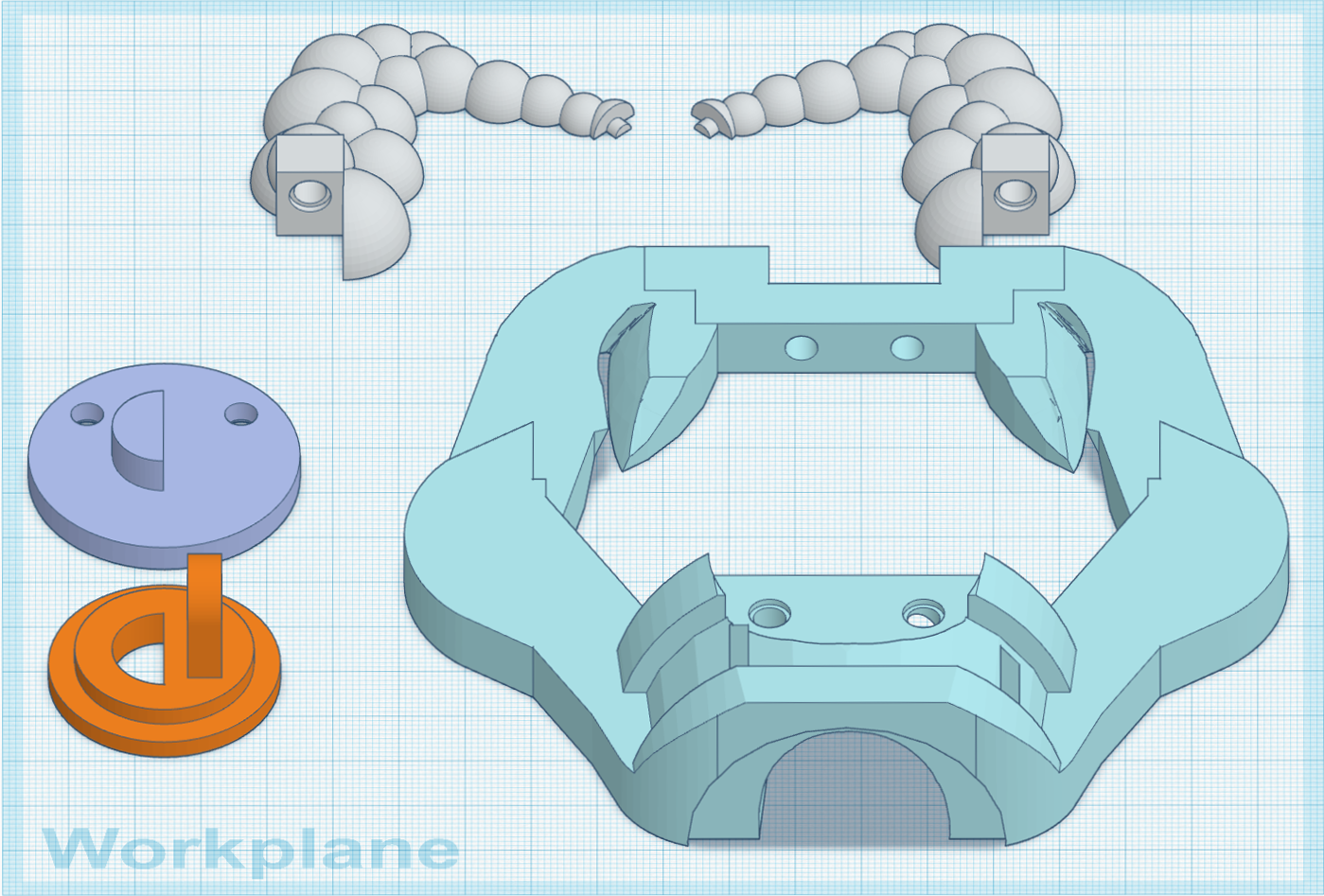

After a few quick sketches, I went to Thingiverse to look for a smartphone mount. I started by modifying jakejake’s Universal Phone Tripod Mount (https://www.thingiverse.com/thing:2423960). The design of this mount is brilliant, and it holds up pretty well. I then built out the rest of the device piece by piece. I wanted some kind of switch at the bottom of the device that lets the player “send YAMIs back to the void”, a physical action the player performs to initiate the send-back. This reminds me of the Tenketsu (天穴, Heavenly hole) in the anime Kekkaishi (結界師).

I created a ring-like contraption at the bottom of the grip. When a geist is weakened, the player pulls down the ring to initiate the interdimensional suction. For the rest of the inputs, I had originally wanted to use a Dual Button unit, but I found out that it shares the same pin (GPIO36) with the OP.90 unit on the M5Stack FIRE.

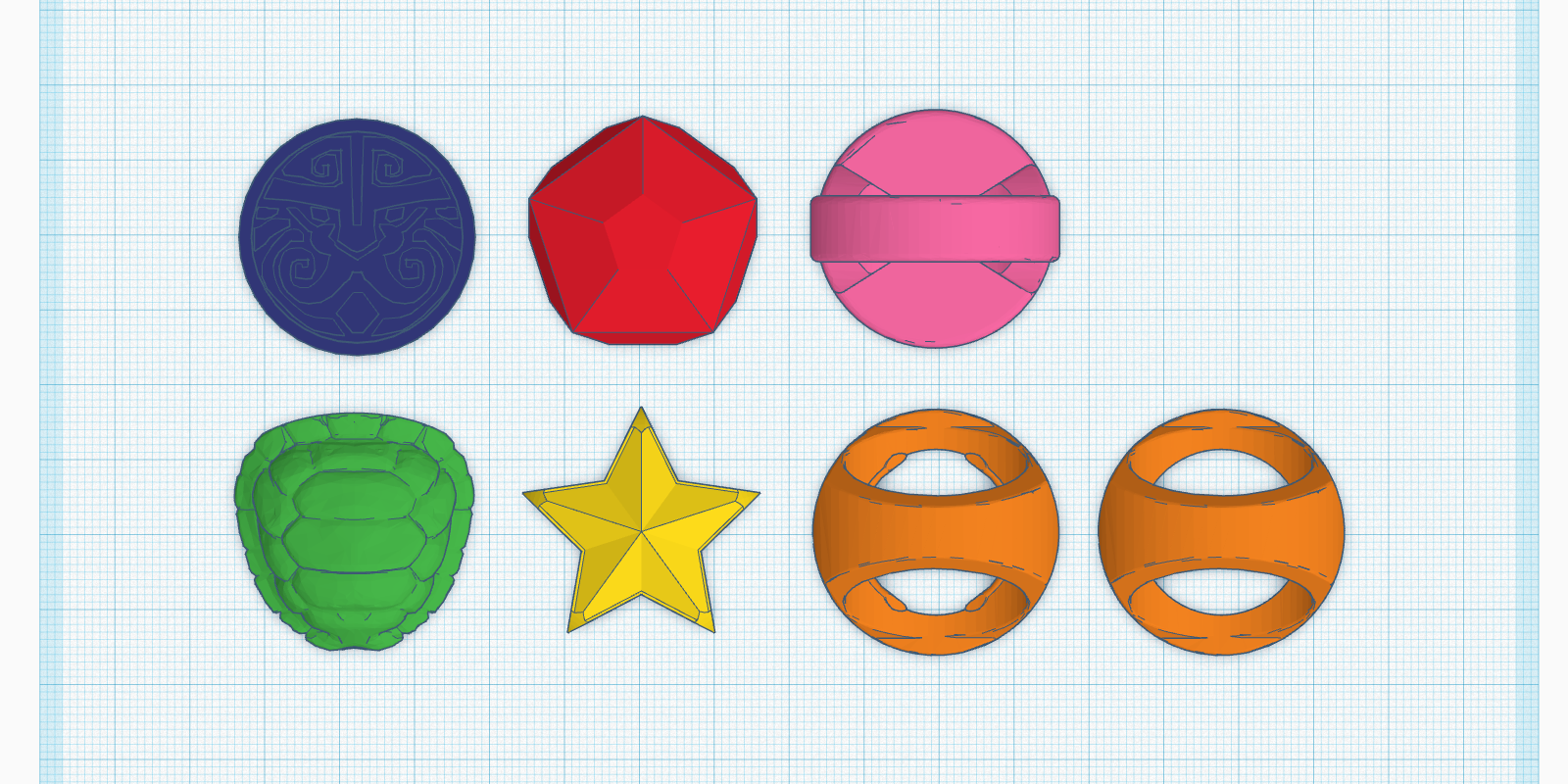

The other game mechanic that I wanted to add to the controller is spell casting. I want magic rings! I quickly prototyped some wearable rings with embedded RFID tags. The player has to choose which ring to use during the capture.
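The capture rule described above (weakened YAMI, ring pulled, right ring chosen) can be sketched as a small check. This is a hypothetical illustration, not the game's actual logic: the tag UIDs, spell names, and the `hp`, `capture_hp`, and `weak_to` fields are all made up for the example.

```python
# Hypothetical RFID UIDs (lowercase hex) mapped to the spell each ring casts.
RING_SPELLS = {
    "04a1b2c3": "fire",
    "04d4e5f6": "water",
}


def spell_for(uid):
    """Look up the spell bound to a ring's UID (case-insensitive); None if unknown."""
    return RING_SPELLS.get(uid.lower())


def try_capture(yami, uid, ring_pulled):
    """True only when the ring is pulled, the YAMI is weakened, and the worn ring matches."""
    return bool(ring_pulled
                and yami["hp"] <= yami["capture_hp"]
                and spell_for(uid) == yami["weak_to"])


yami = {"hp": 10, "capture_hp": 20, "weak_to": "water"}  # hypothetical YAMI state
print(try_capture(yami, "04D4E5F6", ring_pulled=True))
```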

Development notes:

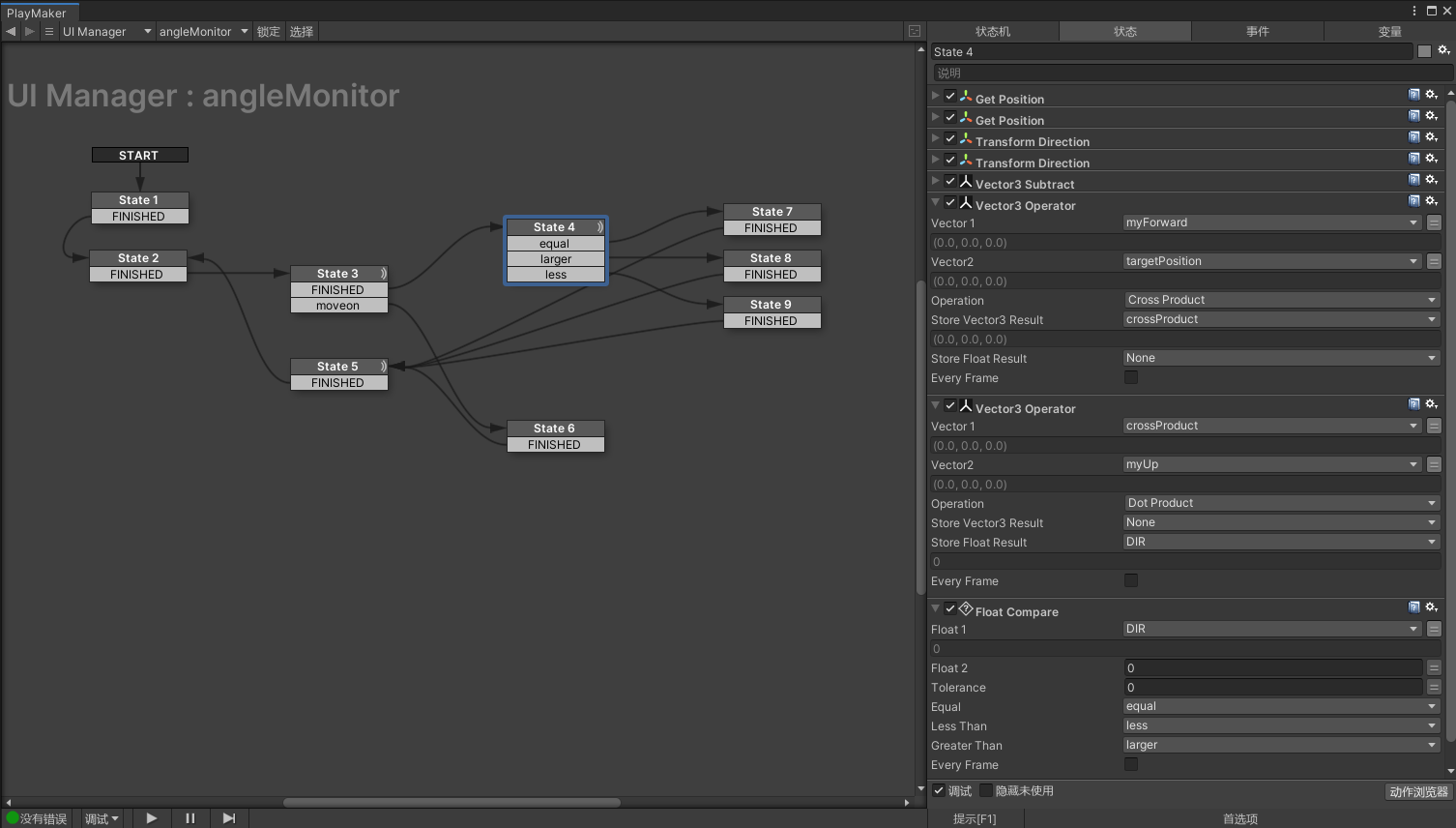

Left or Right of the forward vector:

This is one of those topics that sounds pretty simple at first, but it takes some vector math to figure out. The original solution, written in C#, was found here:

https://forum.unity.com/threads/left-right-test-function.31420/

```csharp
using UnityEngine;

public class LeftRightTest : MonoBehaviour
{
    public Transform target;
    // 1 = target is to the right, -1 = to the left, 0 = straight ahead or behind
    public float dirNum;

    void Update()
    {
        // Vector from this object to the target
        Vector3 heading = target.position - transform.position;
        dirNum = AngleDir(transform.forward, heading, transform.up);
    }

    float AngleDir(Vector3 fwd, Vector3 targetDir, Vector3 up)
    {
        // The cross product points along +up or -up depending on which side targetDir is on
        Vector3 perp = Vector3.Cross(fwd, targetDir);
        // Its sign relative to the up vector tells right (+) from left (-)
        float dir = Vector3.Dot(perp, up);

        if (dir > 0f) {
            return 1f;
        } else if (dir < 0f) {
            return -1f;
        } else {
            return 0f;
        }
    }
}
```

I translated it line by line using Playmaker and it worked like magic.
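To check the math outside Unity, here is the same test as a plain Python sketch. It assumes Unity's axis convention (x right, y up, z forward) and uses the same component formula as Unity's `Vector3.Cross`; the helper names are illustrative only.

```python
def cross(a, b):
    """Cross product of two 3-vectors (same component formula as Unity's Vector3.Cross)."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    """Dot product of two 3-vectors."""
    return sum(x * y for x, y in zip(a, b))


def angle_dir(fwd, target_dir, up):
    """1.0 if target_dir is right of fwd, -1.0 if left, 0.0 if straight ahead or behind."""
    d = dot(cross(fwd, target_dir), up)
    return 1.0 if d > 0 else -1.0 if d < 0 else 0.0


fwd, up = (0, 0, 1), (0, 1, 0)
print(angle_dir(fwd, (1, 0, 0), up))   # 1.0  (target on the +x, right-hand side)
print(angle_dir(fwd, (-3, 0, 2), up))  # -1.0 (target ahead and to the left)
```

A target exactly in line with the forward vector gives a zero cross product, which is why the function can also return 0.0.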

Looping Audio in Playmaker:

Another one that sounds easy but takes some very specific steps to make it work in Playmaker. The best answer is from this thread:

https://hutonggames.com/playmakerforum/index.php?topic=5428.0

Genieless Lamp

eKids Genie Lamp Speaker Gold

The big idea is to modify this toy lamp into an alternative controller. There are four hexagon-shaped LED covers on each side of the lamp. After a quick autopsy, I found that these covers can easily be turned into touch buttons, which are perfect for simulating the back-and-forth lamp-rubbing action. I will be using M5Stack + MPR121 (Touch Sensor Grove Platform Evaluation Expansion Board) + our HID Input Framework for xR to prototype this experience.

In order for the lamp to be tracked in VR, I had to find a way to mount the touch controller on the lamp as well. After some rapid prototyping, I decided to mount the touch controller on the top and the M5Stack on the bottom of the lamp. I imagine the HTC VIVE Tracker would also be a great option because of its compact form factor, but I am trying to keep the controller wireless.

lo-fi Demo video: https://www.instagram.com/p/CeZ-mPiMIuT/

I am working on the gameplay for the directional rubbing mechanic, which lets the player blow game objects away (rub outward), suck them in (rub inward), or cast/summon (rub back and forth).
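One way to tell these three gestures apart is to look at the order in which the touch pads fire. This is a minimal sketch under an assumed layout: the four pads are numbered 0 to 3 so that increasing numbers mean "outward". The function name, return labels, and `min_steps` threshold are hypothetical, and the real MPR121 electrode mapping would need to be checked on the lamp.

```python
def classify_rub(pads, min_steps=2):
    """Classify a rub from pad indices in touch order (0 = innermost ... 3 = outermost).

    Returns "blow_out", "suck_in", "cast", or "none".
    """
    # Collapse repeated readings of the same pad (a finger resting on one pad)
    seq = [p for i, p in enumerate(pads) if i == 0 or p != pads[i - 1]]
    # +1 for each outward step, -1 for each inward step
    signs = [1 if b > a else -1 for a, b in zip(seq, seq[1:])]
    if not signs:
        return "none"
    reversals = sum(1 for a, b in zip(signs, signs[1:]) if a != b)
    if reversals >= 2:
        return "cast"  # back-and-forth rubbing
    if reversals == 0 and len(signs) >= min_steps:
        return "blow_out" if signs[0] > 0 else "suck_in"
    return "none"  # too short, or only a single change of direction


print(classify_rub([0, 0, 1, 1, 2, 3]))  # outward sweep
print(classify_rub([0, 1, 0, 1, 0]))     # back and forth
```

Counting direction reversals rather than timing keeps the sketch independent of how fast the player rubs.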

Card Swipe

The way we play digital games is quietly shaped by advances in technology. While new technology inspires new play mechanics, obsolete technology also takes away play mechanics we took for granted. One of the better-known examples happened in the early 2000s, when TV technology transitioned from CRT (cathode-ray tube) to LCD. This transition killed off the traditional light gun genre on home consoles, because traditional light-gun technology relies on the timing of the CRT's scanning beam to locate where the gun is pointing on the screen. This tragic loss on mainstream consoles wasn't resolved until late 2006, when the Nintendo Wii Remote came out.

The subject of this post is another example: the Barcode Battler. When it comes to scanning linear barcodes, the card-swiping action is the coolest! Nowadays, barcode-related interactions are done with either a built-in camera or a handheld barcode scanner. The card-swiping action is gone!!

QRE1113

The QRE1113 IR Reflective Photo Interrupter features an easy-to-use analog output, which varies depending on the amount of IR light reflected back to the sensor. The QRE1113 consists of two parts: an IR-emitting LED and an IR-sensitive phototransistor. When you apply power to the VCC and GND pins, the IR LED inside the sensor illuminates. Because dark colors reflect less light, the sensor can tell the difference between white and black areas, which is why it is often used as a line follower in robots.
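For a card swipe, the analog readings first need to become clean black/white samples. Reading the analog output with plain `if value > threshold` tends to flicker at bar edges, so this sketch uses two thresholds (hysteresis). It assumes 10-bit readings (0 to 1023) where darker surfaces read higher, which is typical when the phototransistor has a pull-up; the 300/700 thresholds and the function name are hypothetical and should be tuned on real cards.

```python
def to_bits(samples, low=300, high=700):
    """Threshold raw QRE1113 readings into 1 (dark bar) / 0 (light gap) with hysteresis.

    Assumes darker surfaces give higher readings; flip the comparisons if your
    breakout and wiring behave the other way.
    """
    state = 0
    bits = []
    for s in samples:
        if state == 0 and s > high:
            state = 1  # crossed the upper threshold: now over a dark bar
        elif state == 1 and s < low:
            state = 0  # crossed the lower threshold: back over white
        bits.append(state)
    return bits


print(to_bits([100, 800, 500, 200, 650, 900]))
```

Readings between the two thresholds keep the previous state, so noise near a bar edge does not toggle the output.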

https://www.newark.com/on-semiconductor/qre1113gr/object-sensor-phototransistor/dp/34C1691

https://www.ebay.com/itm/144367101969

https://www.mouser.com/ProductDetail/onsemi-Fairchild/QRE1113

ROB-09453

https://www.digikey.com/en/products/detail/sparkfun-electronics/ROB-09453/5762422

https://www.sparkfun.com/products/9453

ROB-09454

https://www.digikey.com/en/products/detail/sparkfun-electronics/ROB-09454/5725749

QRE1113GR

ROB-09453

ROB-09454

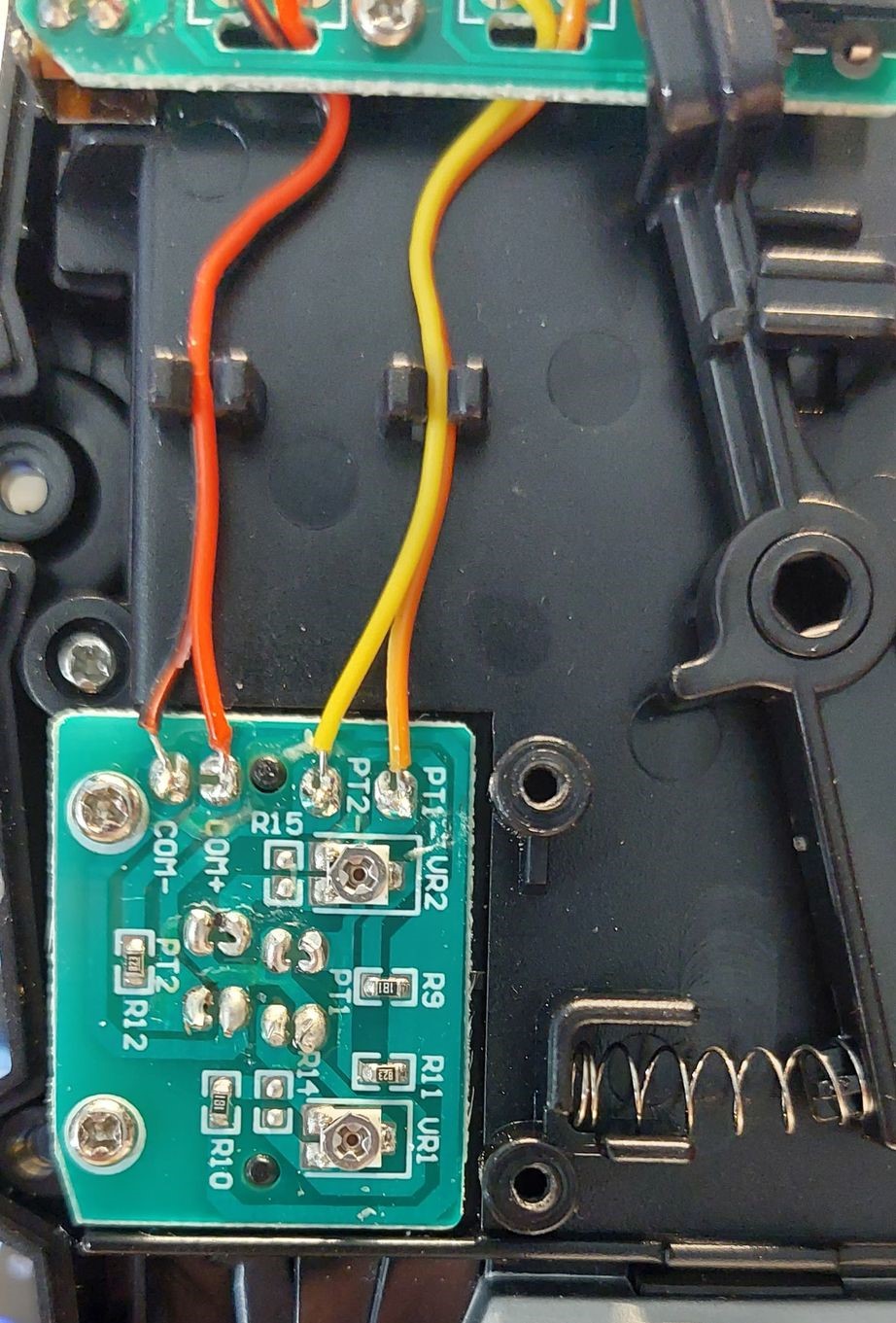

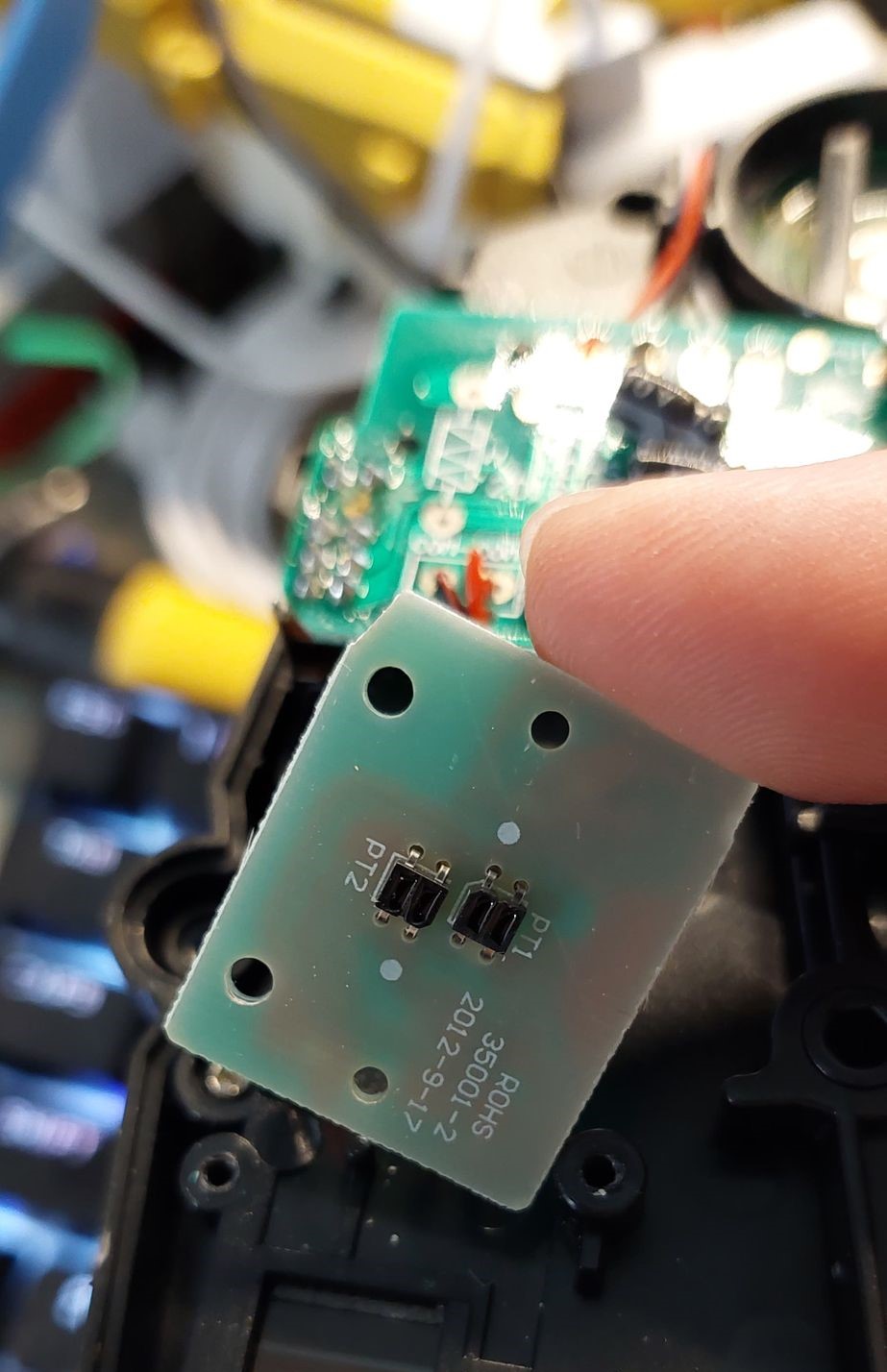

I recently found out that both the US and Japanese versions of the Goseiger (天装戦隊ゴセイジャー) henshin toy Tensouder (テンソウダー) use two QRE1113 sensors to read the double-decked barcode on the side.

Tensouder

QRE1113 IR reflectance sensor and ESP8266 example:

http://www.esp8266learning.com/qre1113-ir-reflectance-sensor-and-esp8266-example.php

Barcode Binary Card Reader

https://hackaday.io/project/9129-barcode-binary-card-reader
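With two sensors, one common way to read a two-row barcode regardless of swipe speed is to print a regular clock track next to the data track, and to latch a data bit each time the clock track turns dark. This is not confirmed to be how the Tensouder or the Barcode Binary Card Reader work; it is a sketch that assumes both tracks have already been thresholded into 0/1 samples, and the function names are hypothetical.

```python
def decode_two_track(clock, data):
    """Latch one data bit on each rising edge (0 -> 1) of the clock track."""
    bits = []
    for i in range(1, min(len(clock), len(data))):
        if clock[i - 1] == 0 and clock[i] == 1:
            bits.append(data[i])
    return bits


def bits_to_int(bits):
    """Read the latched bits as a big-endian binary number (e.g. a card ID)."""
    value = 0
    for b in bits:
        value = (value << 1) | b
    return value


clock = [0, 1, 1, 0, 0, 1, 0, 1]
data = [0, 1, 1, 0, 0, 0, 1, 1]
print(bits_to_int(decode_two_track(clock, data)))
```

Because each bit is tied to a clock edge rather than a time interval, a slow swipe just produces more samples per bar and decodes to the same value.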

Leap Motion

Documentation:

https://developer-archive.leapmotion.com/documentation/v2/unity/index.html

System Requirements

- Leap Motion 2.3.1+

- Unity 5.1+

- Windows 7, Windows 8, Mac OS X

Installation

- Download the latest asset package from: https://developer.leapmotion.com/downloads/unity.

- Open or create a project.

- Select the Unity Assets > Import Package > Custom Package menu command.

- Locate the downloaded asset package and click Open.

- The assets are imported into your project.

Every development and client computer must also install the Leap Motion service software (which runs automatically after it is installed).

Using Processing

You can use the Leap Motion Java libraries in a Processing Sketch (in Java mode). This involves adding the Leap Motion files to the Processing libraries folder and importing the Leap Motion classes into the Sketch.

Setting Up the Leap Motion Libraries

To put the Leap Motion Java libraries in the Processing libraries folder, do the following:

- Locate and open your Sketchbook folder. (The path to this folder is listed in the Processing Preferences dialog.)

- Find the folder named `libraries` in the Sketchbook folder, if it exists. Create the folder, if necessary.

- Inside `libraries`, create a folder named `LeapJava`.

- Inside `LeapJava`, create a folder named `library`.

- Find your LeapSDK folder (wherever you copied it after downloading).

- Copy the following 3 library files from `LeapSDK/lib` to `LeapJava/library`:

| Platform | Java library | Native wrapper | Native Leap library |
| --- | --- | --- | --- |
| Mac OS X | LeapJava.jar | libLeapJava.dylib | libLeap.dylib |
| Windows 32bit | LeapJava.jar | x86/LeapJava.dll | x86/Leap.dll |
| Windows 64bit | LeapJava.jar | x64/LeapJava.dll | x64/Leap.dll |

Processing 3.5.4

Library Dependencies:

Leap Motion Software v2 (2.3.1+31549)

https://developer-archive.leapmotion.com/v2?id=skeletal-beta&platform=windows&version=2.3.1.31549

https://developer-archive.leapmotion.com/unity

https://grasshopper-kale-khsa.squarespace.com/tracking-software-download

QUICK SETUP GUIDE – UNITY PACKAGE FILES (.UNITYPACKAGE)

If you prefer, you can get the Ultraleap Hand Tracking Plugin for Unity using .unitypackage files. This can be helpful if you need to modify the package content. Please note that for future releases, .unitypackage files will need to be updated manually.

- Ensure that you have the Ultraleap Hand Tracking Software (V5.2+) installed.

- Remove any existing Ultraleap Unity modules from your project. If you need to do this, we strongly recommend you read our guide to Upgrading to the Unity Plugin from Unity Modules.

- Download the Unity Modules package.

- Right-click in the Assets window, go to Import Package and left-click Custom Package.

- Find the Tracking.unitypackage and import it. This includes Core, Interaction Engine, and the Hands Module.

- Optionally import:

  - the Tracking Examples.unitypackage for example content

  - the Tracking Preview.unitypackage and Preview Examples.unitypackage for experimental content and examples. This content can go through many changes before it is ready to be fully supported. At some point in the future, preview content might be promoted to the stable package; however, it might also be deprecated instead. Because there is no guarantee of future support, we do not recommend using preview packages in production.

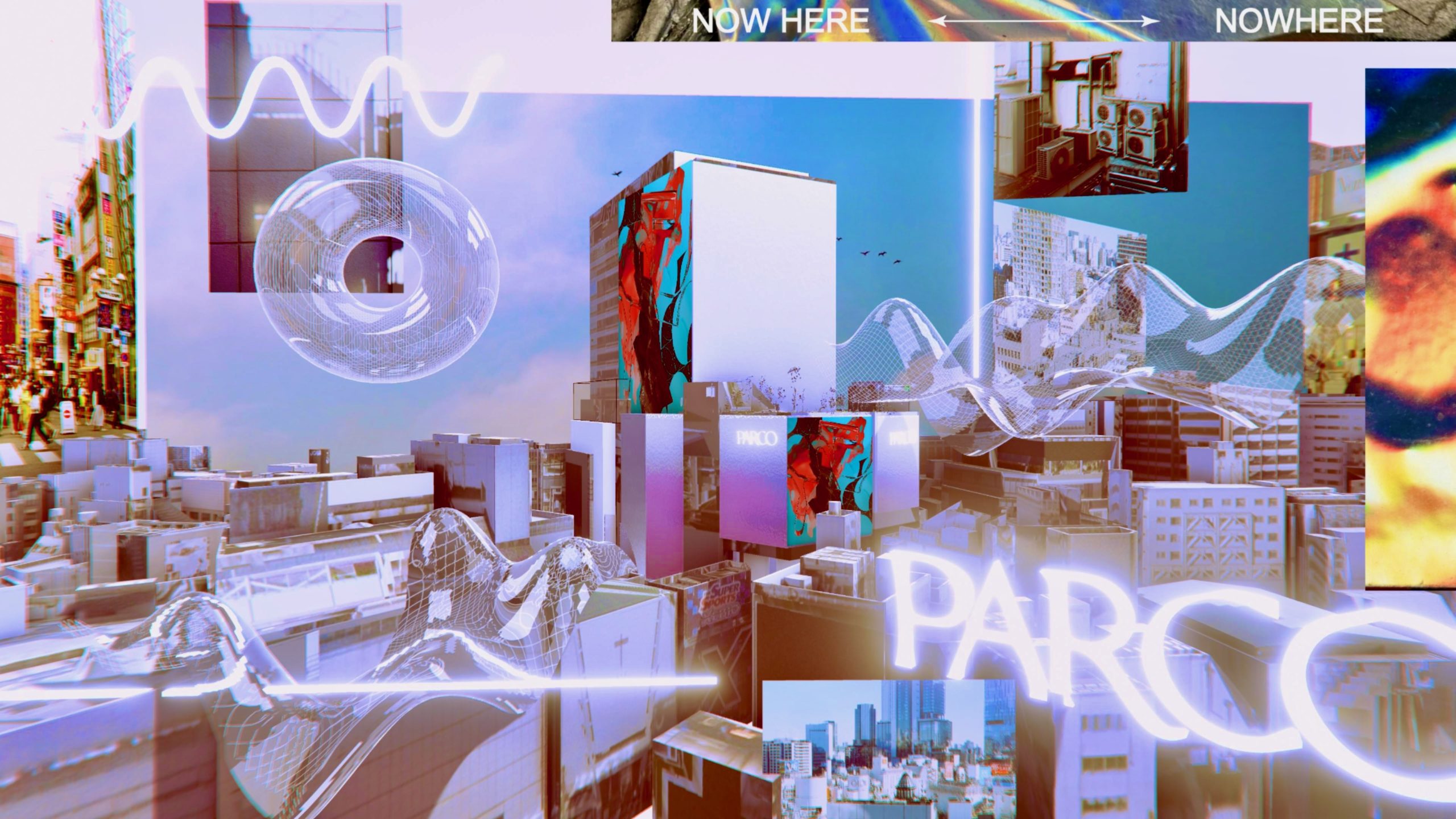

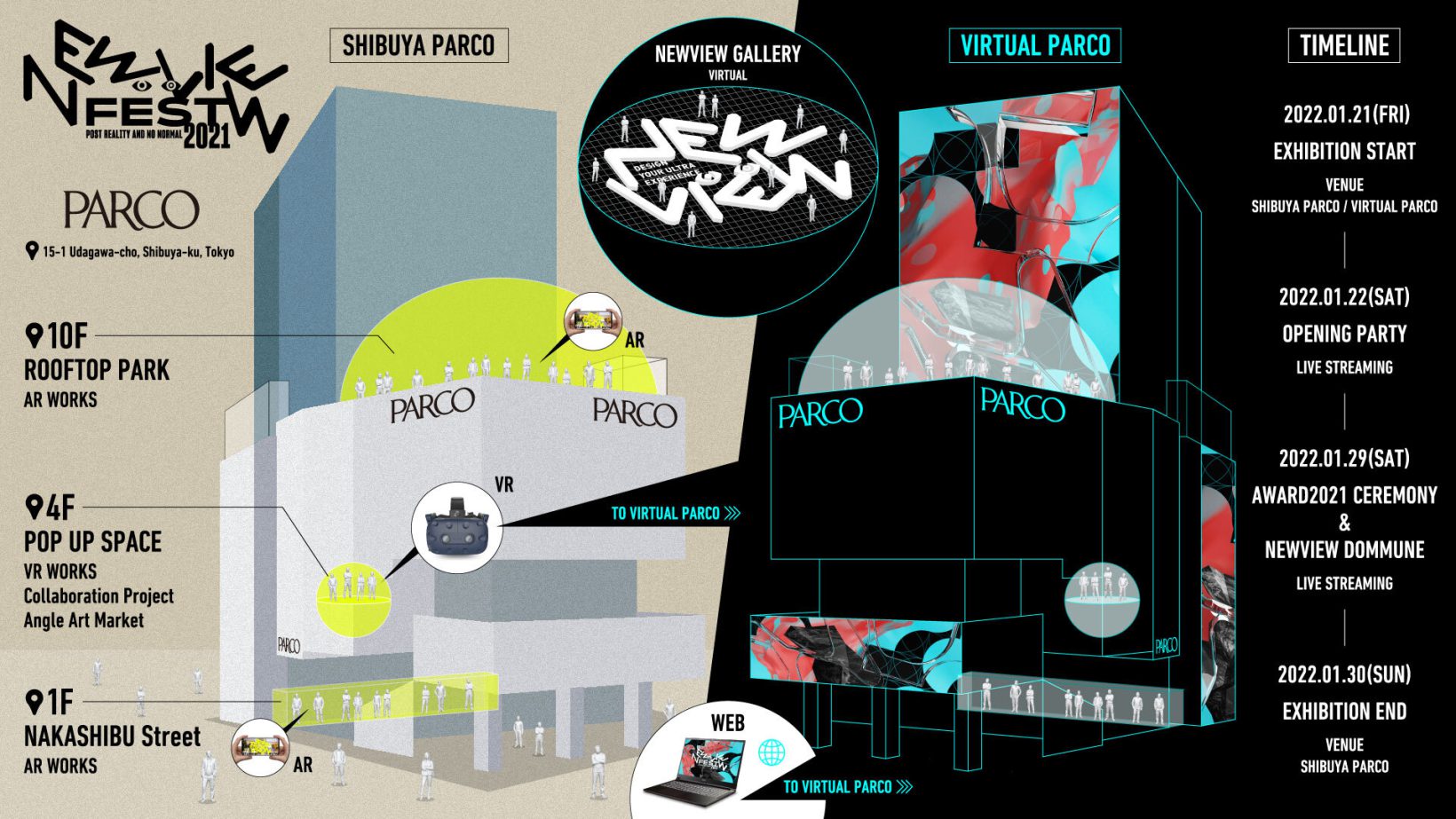

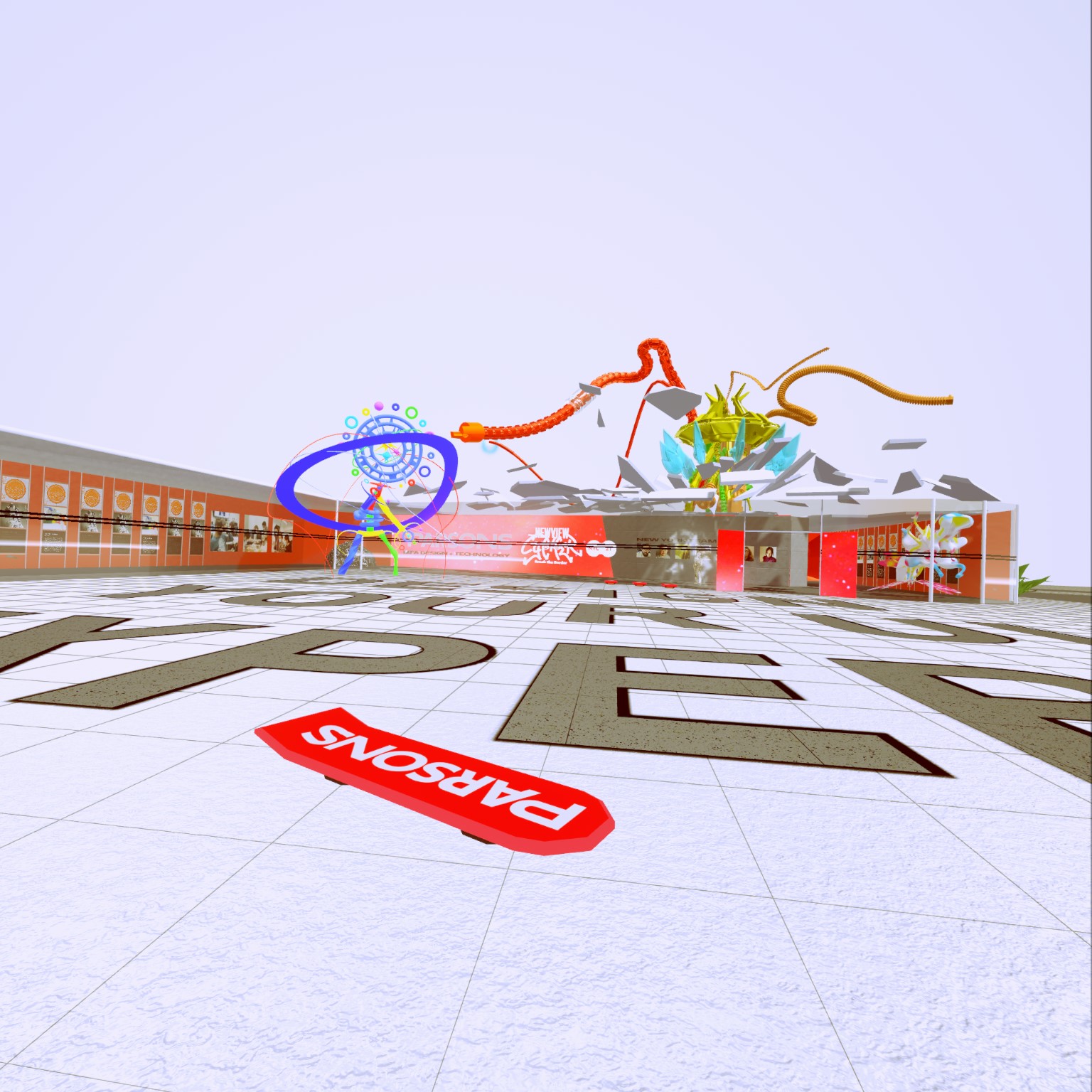

NEWVIEW AWARDS 2021

Last year was an exciting year. First, I was invited to teach at NEWVIEW SCHOOL TAIPEI. Besides redesigning the curriculum, I worked with a group of brilliant designers and technologists to design an M5 extension and framework that allows users to create alternative controllers with minimal programming. Here are some of the controllers I made using the framework.

When I found out NEWVIEW SCHOOL was interested in coming to NYC, I immediately proposed hosting it at Parsons School of Design. At that point, I didn’t know that one of the students in this collaboration studio would win the grand prize of the NEWVIEW AWARDS 2021. I was just really excited that I could officially bring this code-less VR/AR/XR pipeline to my students in the Design and Technology program.

“One way to look for innovation in game design is to have non-game designers make games.” This saying has circulated in the indie game community for a long time. I believe the spirit behind it is also the key to finding the ultimate content type(s) that will help VR technology earn a place in our daily lives. As a faculty member at Parsons, I have been looking for ways to help our artist and designer students take their original content into virtual space for a spin. I co-created the first VR experiment course at Parsons, called Recursive Reality, in late 2013. This course and another popular course in our program, the New Arcade, became the curriculum backbone of this collaboration studio: NEWVIEW SCHOOL.

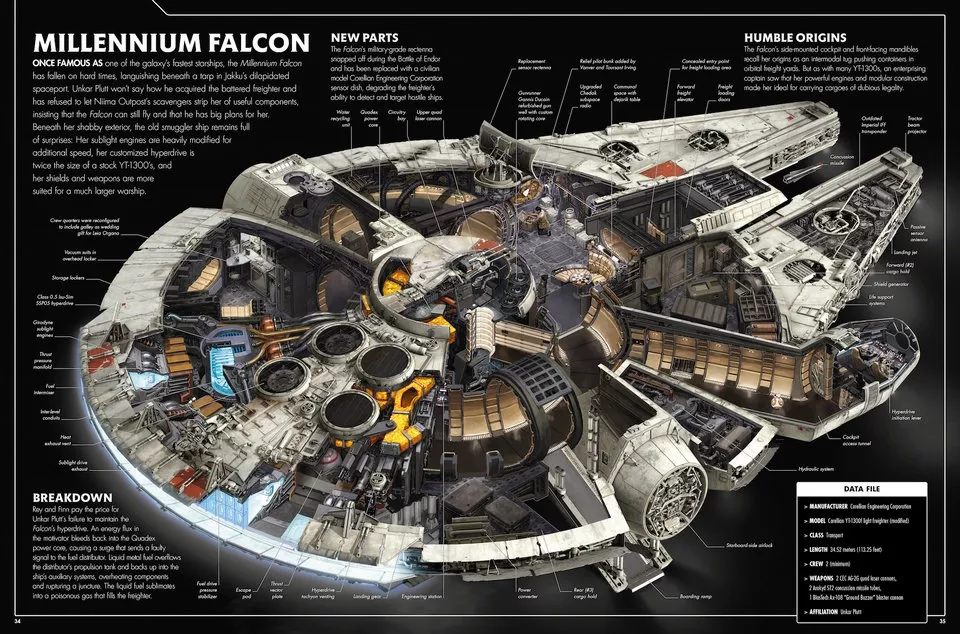

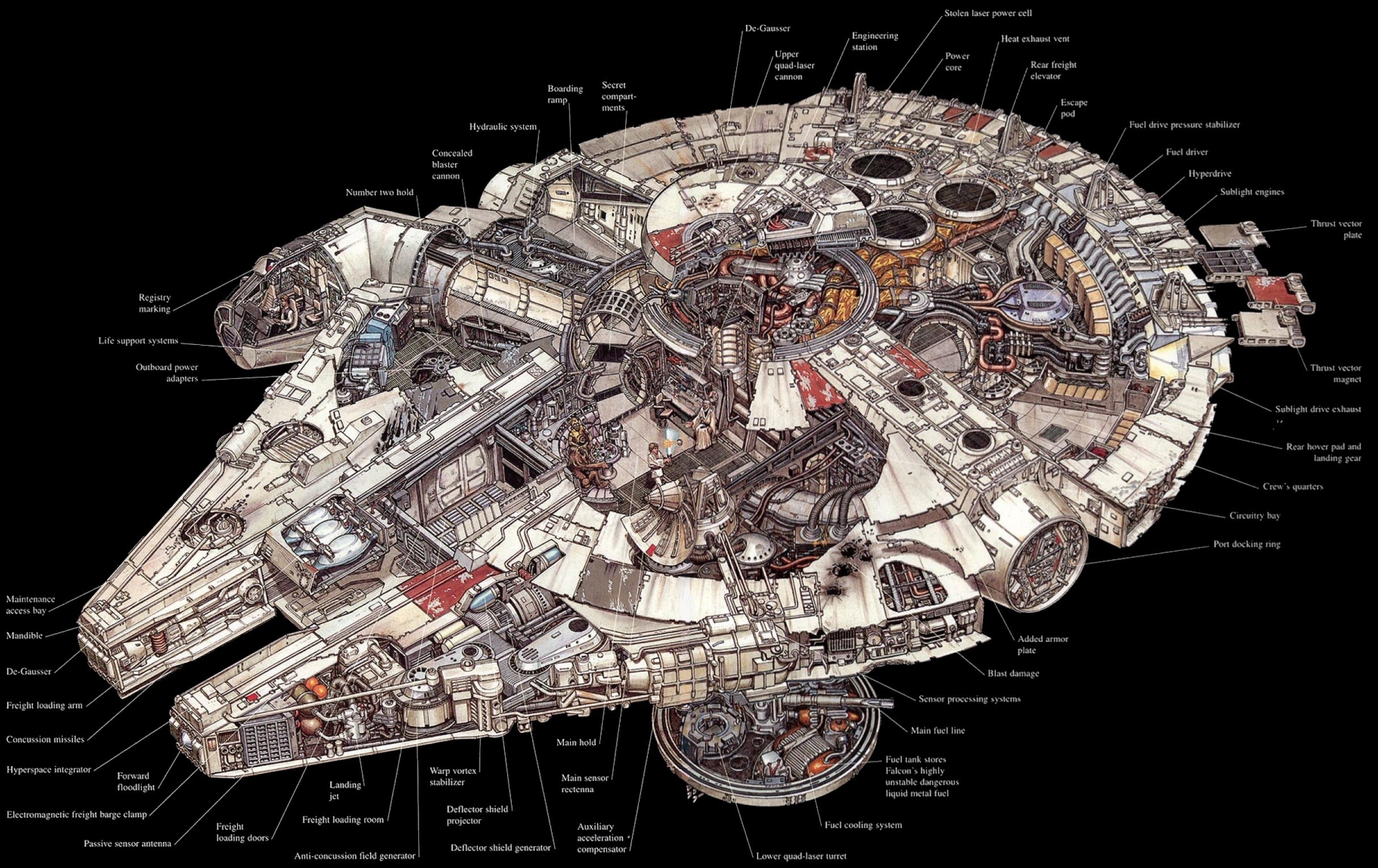

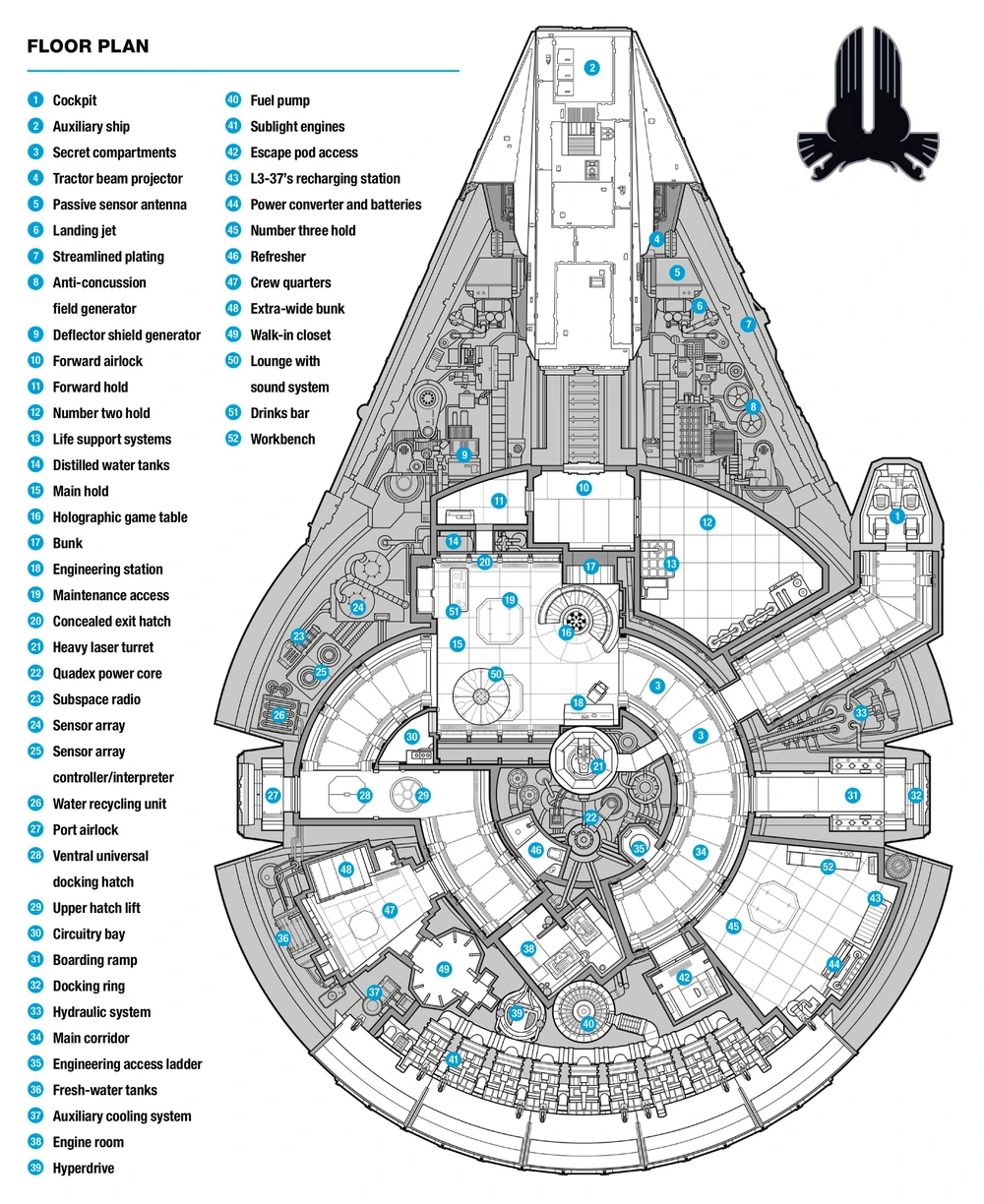

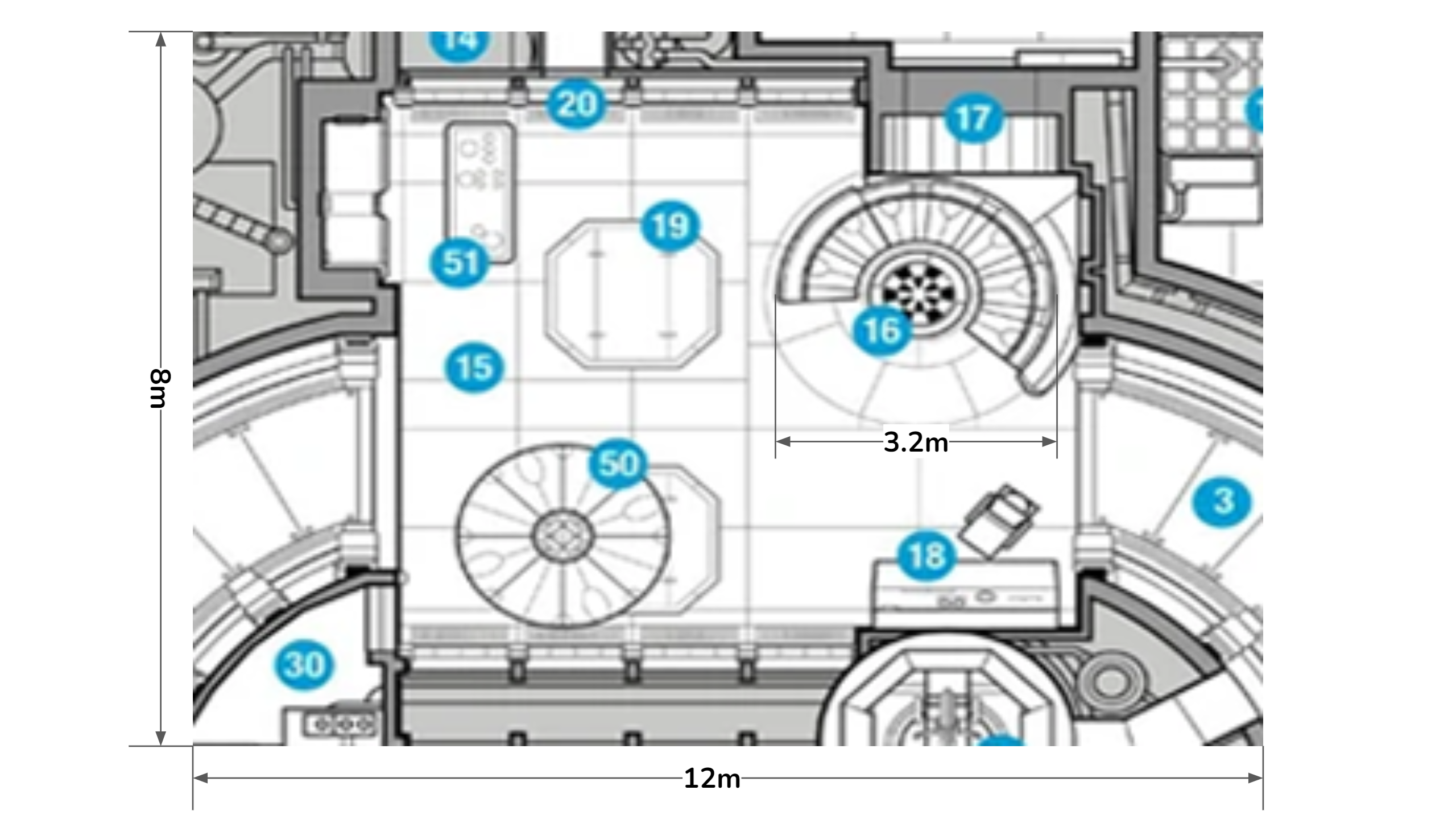

The Millennium Falcon

Girl Gun Lady

ガールガンレディ

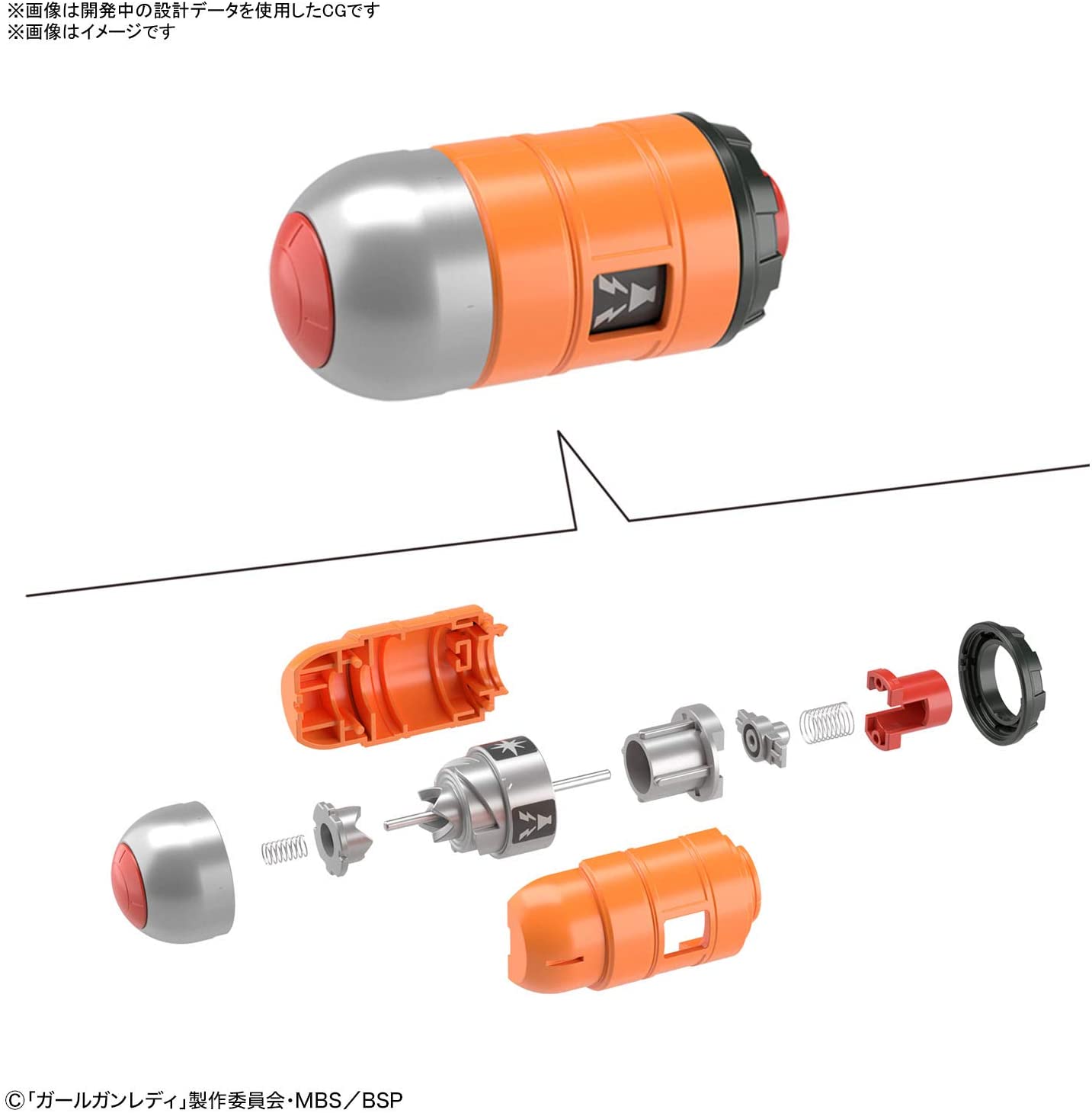

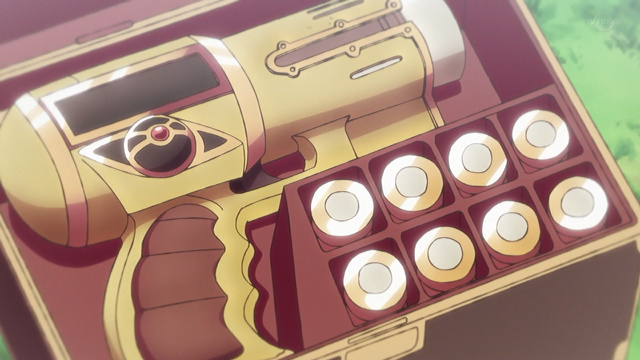

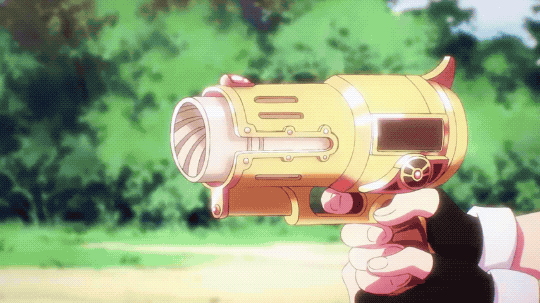

Girl Gun Lady is a Japanese live-action sci-fi TV drama. I am particularly fascinated by the digital weapons designed for this drama. All of the weapons, including the Gun Ladies, are available as plastic model kits, and I am having a blast building some of them. My favorite weapon is the Alpha Tango. It is the size of a handgun but functions like a grenade launcher, and the grenade ammo can be programmed to do different things.

Alpha Tango also reminds me of Maam’s Magic Bullet Gun (魔弾銃 まだんガン) from Dragon Quest: The Adventure of Dai, which is another favorite sci-fi weapon of mine from childhood.

Picking the right bullet for the situation is an interesting game mechanic to explore. Judge Dredd’s Lawgiver is another fun(?) example. In early 2007, I did a voice-activated light gun project inspired by the Lawgiver in Sylvester Stallone’s Judge Dredd (1995).

This should be my next data relic. Meanwhile, I did a quick study of Maam’s Magic Bullet Gun in Tinkercad.

I also modified the Oculus Quest 2 Controller Pistol Grip (https://www.thingiverse.com/thing:4760656) to work with M5Stack. This could be great for a voice-activated weapon using Google Assistant. The grip file I downloaded directly from Thingiverse didn’t fit; I couldn’t push the grip all the way up as shown in the pictures. I used Tinkercad to make the hole bigger with a +1% scaled model of a Quest 2 controller. After that adjustment, it fits smoothly.

Going back to voice input: my experiments with both Watson and Google Assistant showed a significant delay in the speech-to-text response, and it gets worse with a slow internet connection. In the past, I had a hard time demonstrating projects that used cloud speech-to-text services at demo day events and conferences. What can be done in UX to make that passage of time feel shorter, or less significant? Slow motion? Well, there is only one way to find out.
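One cheap way to prototype the slow-motion idea is to ramp the game's time scale down (in Unity, `Time.timeScale`) as soon as the voice command ends, so that less game time passes than real time while waiting for the cloud response. This Python sketch only works out the arithmetic; the 0.25 s ramp, the 0.2 floor, and the function names are hypothetical tuning choices, not measured values.

```python
def slowmo_scale(t, ramp=0.25, floor=0.2):
    """Time scale t seconds after the voice command ends: eases linearly from 1.0 to floor."""
    if t <= 0:
        return 1.0
    if t >= ramp:
        return floor
    return 1.0 - (1.0 - floor) * (t / ramp)


def game_time_elapsed(latency, dt=0.001, **kw):
    """Numerically integrate how much game time passes during `latency` real seconds."""
    steps = int(round(latency / dt))
    return sum(slowmo_scale(i * dt, **kw) * dt for i in range(steps))


print(round(game_time_elapsed(1.5), 2))
```

With these defaults, a 1.5 s cloud delay plays out as about 0.4 s of game time: 0.15 s during the 0.25 s ramp (whose average scale is 0.6) plus 1.25 s at 0.2x.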